
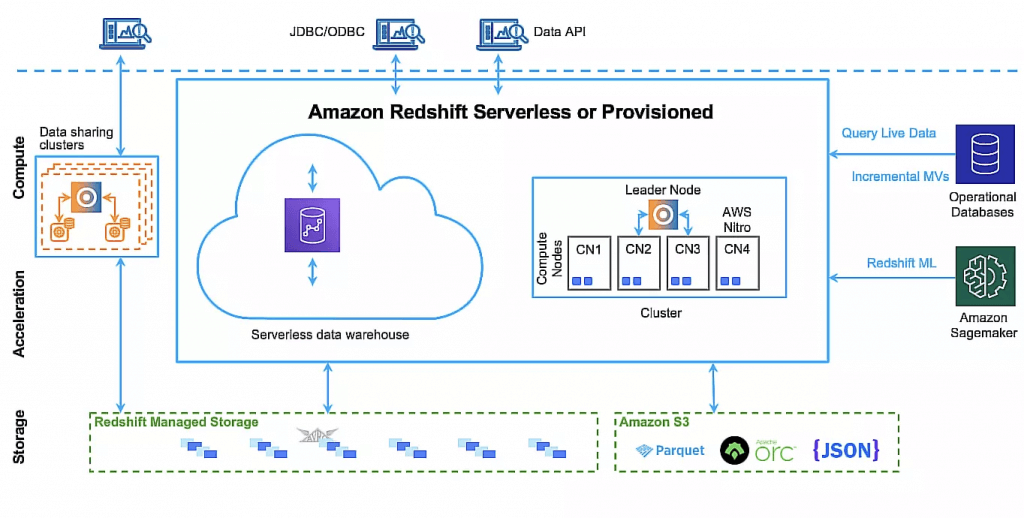

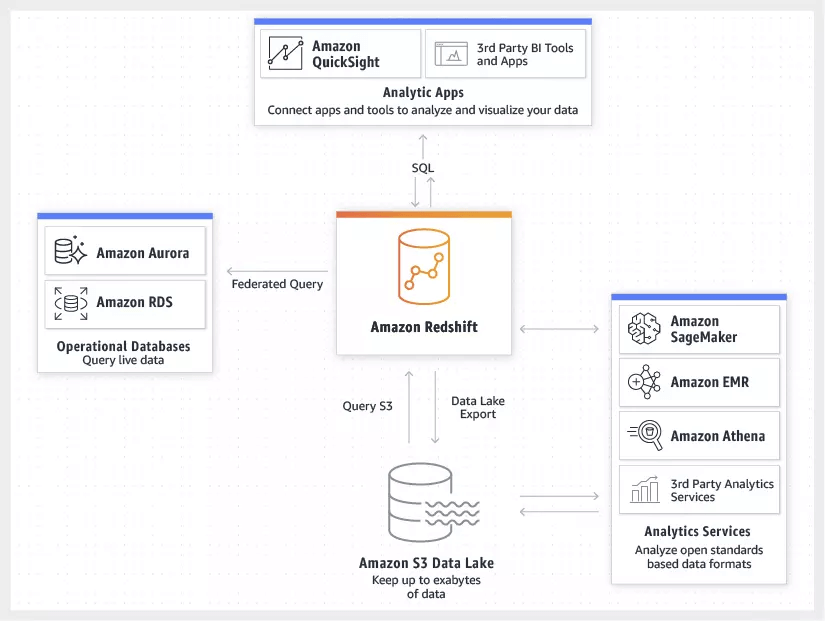

Amazon Redshift

- a fast, fully managed, petabyte-scale data warehouse service that makes it simple and cost-effective to analyze all your data

- uses Massively Parallel Processing (MPP) to spread query execution across multiple nodes, so large volumes of data are processed in parallel


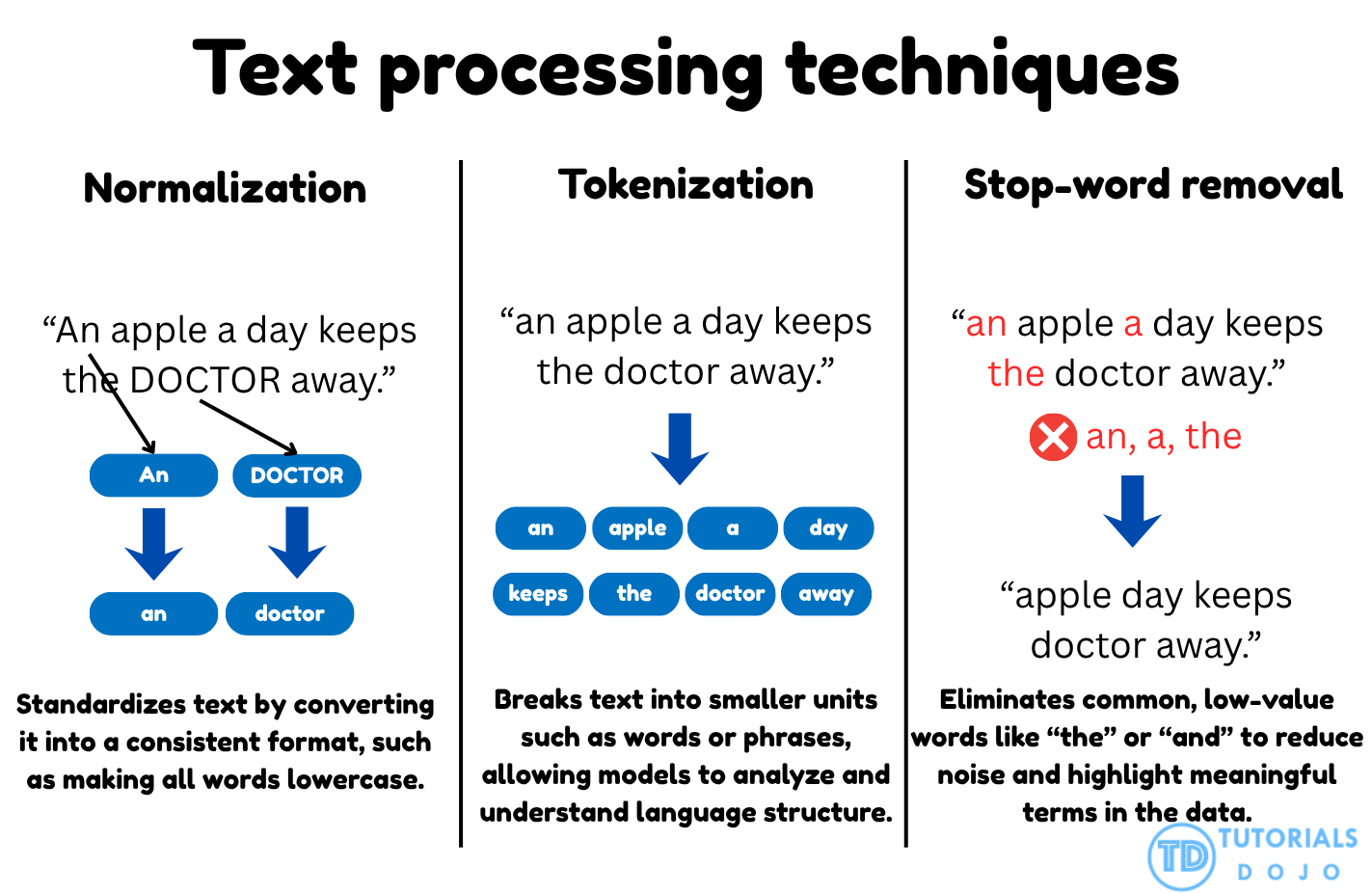

Preparing textual data for machine learning involves preprocessing steps that transform raw text into a clean, structured format. Real-world data often contains inconsistencies such as mixed casing, redundant words, and noise that can hinder model performance. Standardizing and cleaning the text helps models capture meaningful semantic relationships, improving accuracy, interpretability, and consistency across NLP workflows.

Text normalization and tokenization are two core preprocessing techniques that work together to structure textual data for natural language processing tasks. Normalization ensures uniformity by converting all text into a consistent format, typically by transforming every word to lowercase. This prevents words such as Apple, APPLE, and apple from being treated as separate tokens, which can fragment the vocabulary and distort semantic understanding. Once text is normalized, tokenization divides sentences into individual word units, enabling models to analyze relationships and co-occurrence patterns between words. In models like Word2Vec, this structured segmentation allows the algorithm to learn the contextual dependencies that define meaning. Together, these steps form the foundation for clean, consistent, and interpretable text data that supports high-quality embedding generation.
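The normalization and tokenization steps described above can be sketched in a few lines of Python. This is a minimal illustration, not a production tokenizer: the function names `normalize` and `tokenize` and the regular expression are assumptions made for this example, and real pipelines often rely on a library tokenizer instead.

```python
import re
from typing import List


def normalize(text: str) -> str:
    """Lowercase the text so 'Apple', 'APPLE', and 'apple' map to one form."""
    return text.lower()


def tokenize(text: str) -> List[str]:
    """Split normalized text into word tokens.

    Assumption: a token is a run of lowercase letters, digits, or apostrophes.
    Punctuation and whitespace act as separators and are discarded.
    """
    return re.findall(r"[a-z0-9']+", text)


sentence = "Apple pie, APPLE juice, and apple sauce."
tokens = tokenize(normalize(sentence))
print(tokens)
# ['apple', 'pie', 'apple', 'juice', 'and', 'apple', 'sauce']
```

Because all three spellings collapse to `apple`, a model such as Word2Vec would see a single vocabulary entry with three occurrences rather than three separate rare tokens.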

Stop-word removal is another essential preprocessing technique that filters out commonly used words that add little or no semantic value to a model’s understanding of language. Words like the, and, is, and of occur frequently across documents and can obscure meaningful patterns in the data. By eliminating these non-informative tokens using a stop-word dictionary, the dataset becomes more concise and focused on the content-bearing terms that drive model learning. This reduction in noise improves embedding clarity and computational efficiency during training.
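As a rough sketch of dictionary-based stop-word removal, the example below filters a token list against a small hand-written set. The `STOP_WORDS` set and the `remove_stop_words` helper are illustrative assumptions; in practice the dictionary usually comes from an NLP library or is tuned to the domain.

```python
from typing import FrozenSet, Iterable, List

# Assumption: a tiny illustrative stop-word dictionary, not a complete list.
STOP_WORDS: FrozenSet[str] = frozenset(
    {"the", "and", "is", "of", "a", "an", "in", "to"}
)


def remove_stop_words(
    tokens: Iterable[str], stop_words: FrozenSet[str] = STOP_WORDS
) -> List[str]:
    """Keep only tokens that are not in the stop-word dictionary.

    Tokens are expected to be lowercased already, so the lookup is a
    plain set membership test.
    """
    return [token for token in tokens if token not in stop_words]


tokens = ["the", "price", "of", "the", "stock", "is", "rising"]
filtered = remove_stop_words(tokens)
print(filtered)
# ['price', 'stock', 'rising']
```

Seven tokens shrink to three content-bearing terms here, which is the noise reduction the paragraph describes.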

When combined, these preprocessing techniques create a structured and optimized dataset that enhances the performance of NLP models. Text normalization ensures consistency, tokenization enables contextual learning, and stop-word removal refines the dataset by emphasizing meaningful terms. Together, they serve as the cornerstone of high-quality data preparation for embedding-based approaches, ensuring that models learn from the true linguistic and semantic essence of text data.

Choose Vector Store

- SharePoint / Confluence / document permissions / “must respect existing ACLs” → Kendra

- Graph relationships (“who knows who”, fraud rings, lineage, dependency graphs) → Neptune Analytics

- Already on Postgres + need joins/transactions + vector search → Aurora + pgvector

- Huge vector corpus + cost pressure + vectors mostly tied to S3 objects → S3 Vectors

- Need full search platform + lots of tuning control → OpenSearch managed

- Unpredictable traffic + “minimize ops” → OpenSearch Serverless

- Cost-first: S3 Vectors (massive corpora, infrequent queries)

- Permissions-first: Kendra

- Relationship-first: Neptune Analytics

- SQL-first: Aurora + pgvector

- Search-first: OpenSearch (managed/serverless)
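The selection rules above can be written down as a small lookup, which is handy for quick self-checks. This is only a sketch: the requirement flag names and the `choose_vector_store` helper are hypothetical, and checking the rules in the order they are listed is an assumption, since the notes do not rank them when several apply.

```python
from typing import List, Optional, Set, Tuple

# (requirement flag, suggested store), in the order the notes list them.
# The flag names are hypothetical labels invented for this example.
RULES: List[Tuple[str, str]] = [
    ("respect_existing_acls", "Kendra"),
    ("graph_relationships", "Neptune Analytics"),
    ("already_on_postgres", "Aurora + pgvector"),
    ("huge_corpus_cost_pressure", "S3 Vectors"),
    ("full_search_tuning_control", "OpenSearch managed"),
    ("unpredictable_traffic_min_ops", "OpenSearch Serverless"),
]


def choose_vector_store(requirements: Set[str]) -> Optional[str]:
    """Return the first store whose requirement flag is present.

    Assumption: earlier rules win when several flags are set.
    Returns None when no rule matches.
    """
    for flag, store in RULES:
        if flag in requirements:
            return store
    return None


print(choose_vector_store({"already_on_postgres"}))
# Aurora + pgvector
print(choose_vector_store({"unpredictable_traffic_min_ops"}))
# OpenSearch Serverless
```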

| Service | Primary Use Case | Key Advantage |
|---|---|---|
| Amazon OpenSearch Service | Advanced search & high-throughput analytics | Recommended for Amazon Bedrock; scales horizontally with built-in k-NN. |
| Amazon S3 Vectors | Large-scale, cost-effective storage (New/2025) | Native vector support in S3 buckets; up to 90% cheaper than in-memory options for infrequent access. |
| Amazon Aurora (pgvector) | Relational data + vector search | Best for hybrid structured/unstructured data; uses existing PostgreSQL tools and SQL queries. |
| Amazon MemoryDB | Ultra-low latency | Fastest vector search performance on AWS with high recall for real-time applications. |
| Amazon Neptune Analytics | Graph-based vector search | Ideal for RAG applications involving complex relationship data. |

| Feature | OpenSearch Service (Managed) | OpenSearch Serverless |
|---|---|---|
| Management | Manual provisioning of nodes/clusters. | Automated provisioning; no clusters to manage. |
| Scaling | Manual or policy-based auto-scaling of instances. | Automatic scaling based on demand (OCUs). |
| Latency | Predictable | Variable |
| Cost Basis | Pay per instance, storage (EBS), and data transfer. | Pay per OpenSearch Capacity Unit (OCU) used. |
| Tuning | Full control (shards) | Limited |