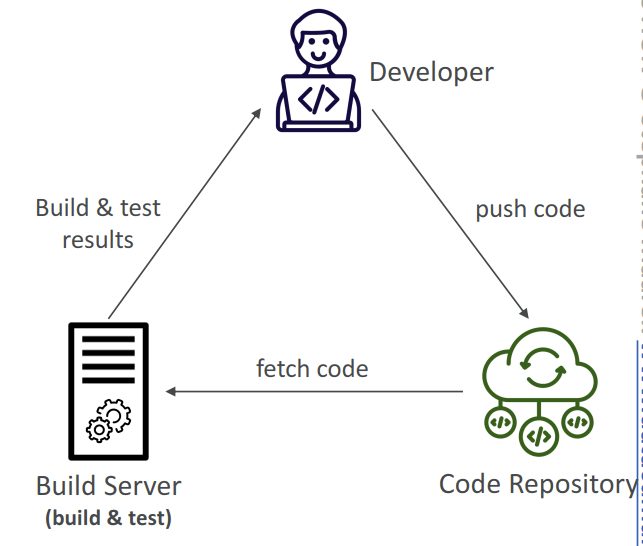

Continuous Integration (CI)

- Developers push the code to a code repository often (e.g., GitHub, CodeCommit, Bitbucket…)

- A testing / build server checks the code as soon as it’s pushed (CodeBuild, Jenkins CI…)

- The developer gets feedback about the tests and checks that have passed / failed

- Find bugs early, then fix bugs

- Deliver faster as the code is tested

- Deploy often

- Happier developers, as they’re unblocked

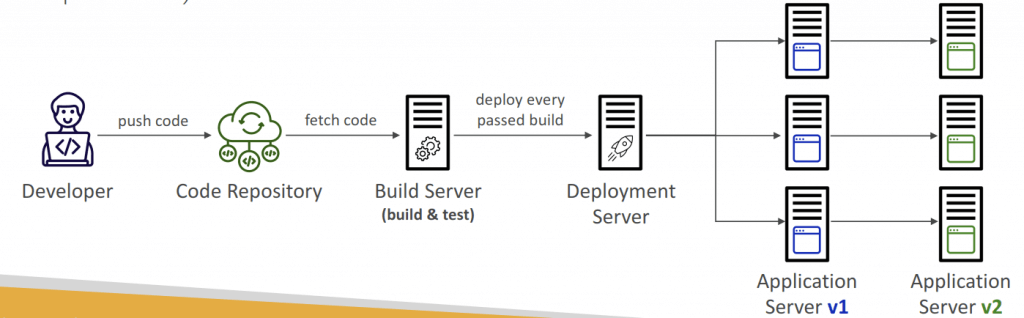

Continuous Delivery (CD)

- Ensures that the software can be released reliably whenever needed

- Ensures deployments happen often and are quick

- Shift away from “one release every 3 months” to “5 releases a day”

- That usually means automated deployment (e.g., CodeDeploy, Jenkins CD, Spinnaker…)

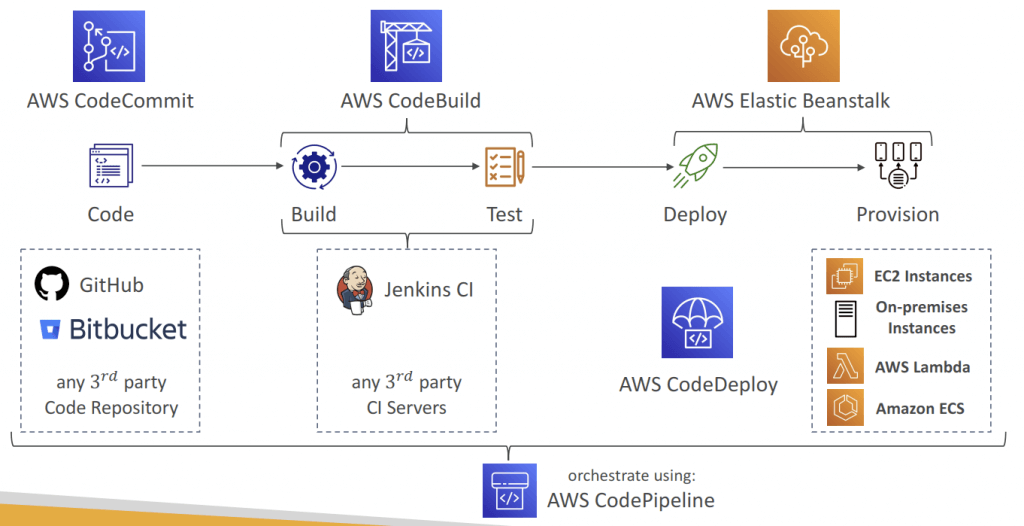

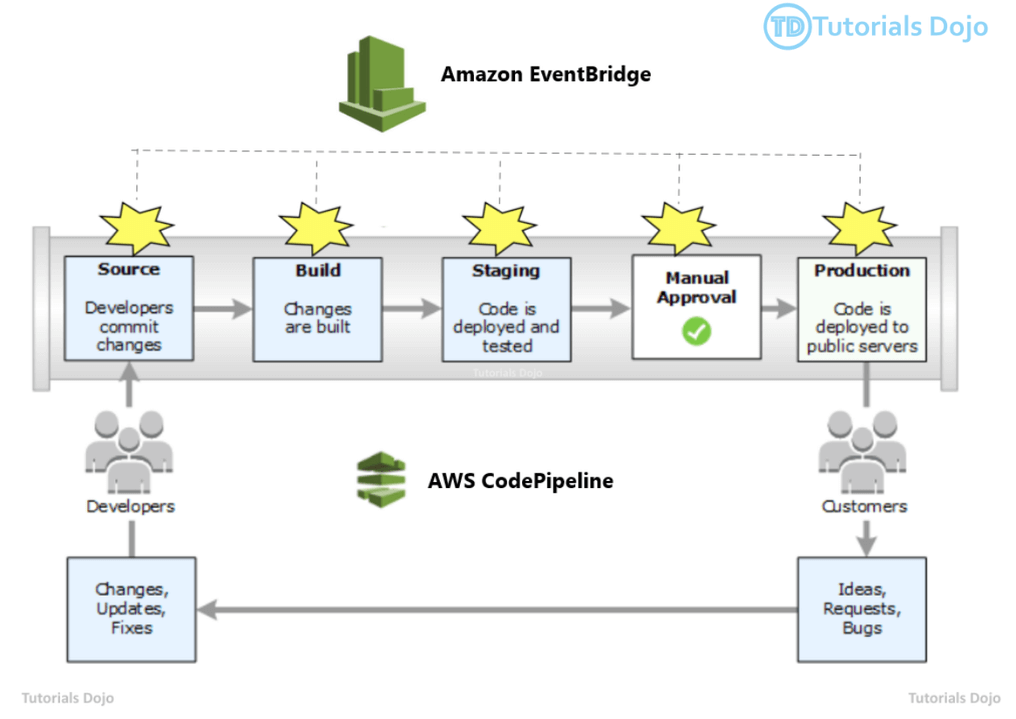

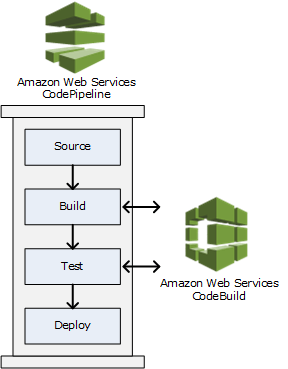

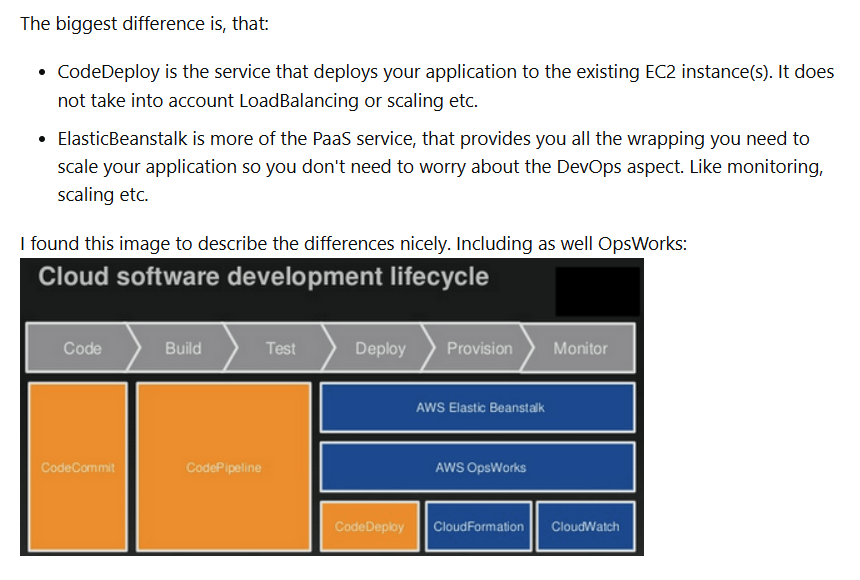

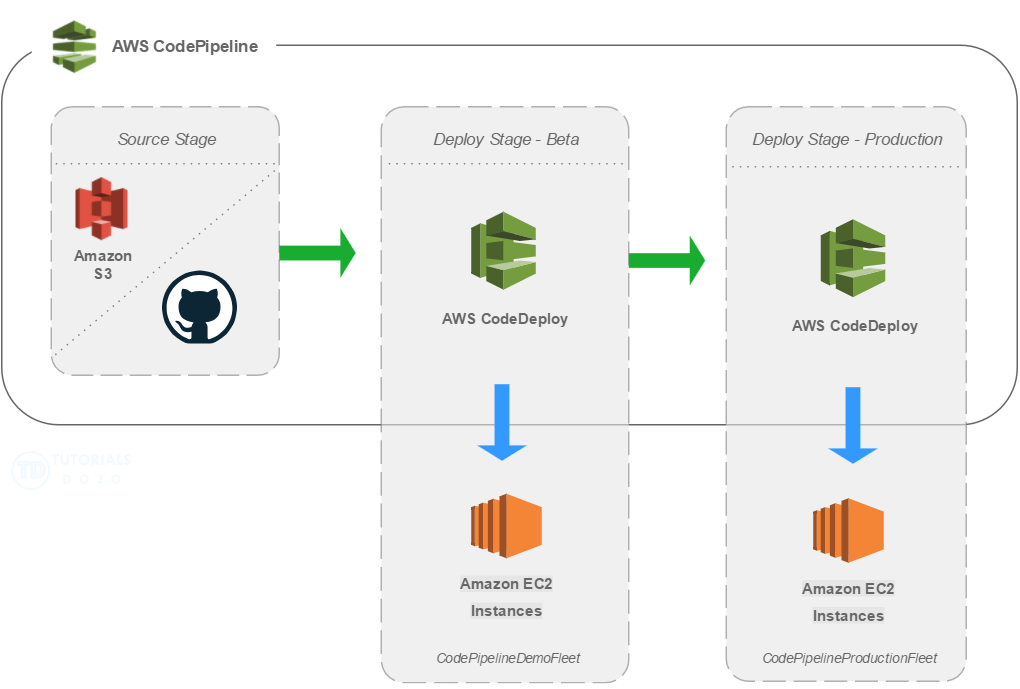

AWS CodePipeline

- [Machine Learning] Automate the entire process of training, testing, and deploying the models

- Automates the pipeline from code to deployment as a visual workflow

- Source – CodeCommit, ECR, S3, Bitbucket, GitHub

- Build – CodeBuild, Jenkins, CloudBees, TeamCity

- Test – CodeBuild, AWS Device Farm, 3rd party tools…

- Deploy – CodeDeploy, Elastic Beanstalk, CloudFormation, ECS, S3…

- Invoke – Lambda (invokes a Lambda function within a pipeline), Step Functions (starts a state machine within a pipeline)

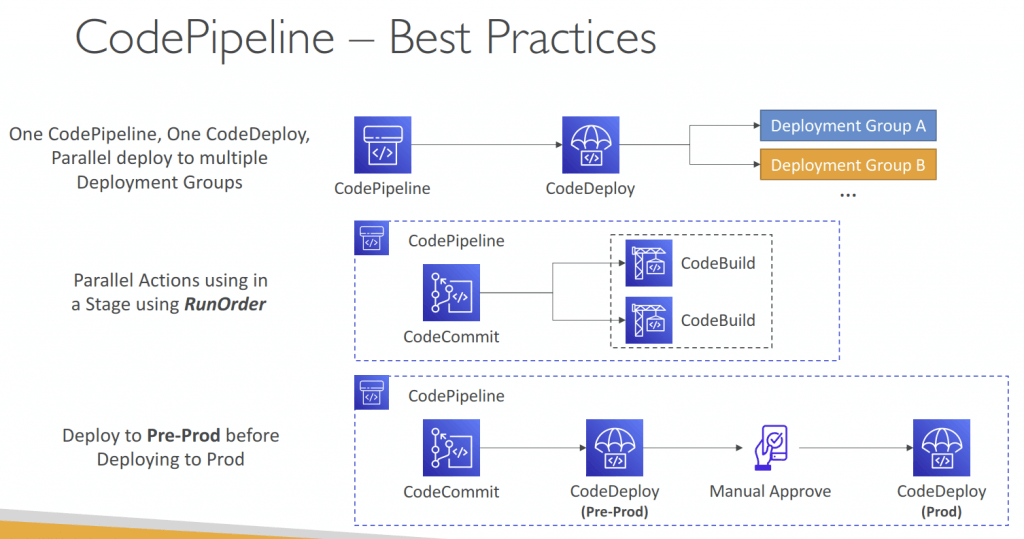

- Consists of stages:

- Each stage can have sequential actions and/or parallel actions

- Manual approval can be defined at any stage

- Each stage can create artifacts, which are stored in an S3 bucket

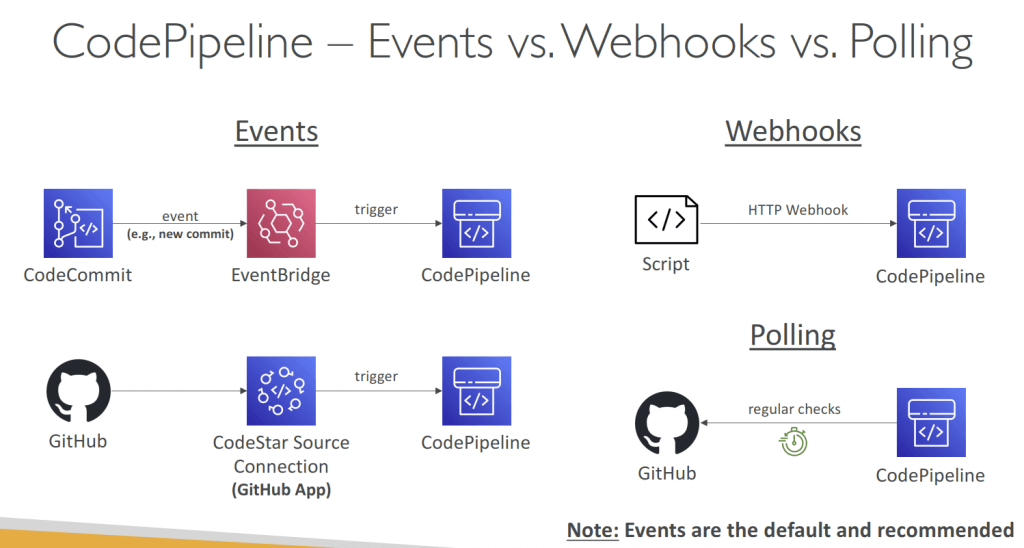

- Use Amazon EventBridge for troubleshooting, and CloudTrail to audit AWS API calls

- If the pipeline can’t perform an action, make sure the attached “IAM Service Role” has enough IAM permissions (IAM Policy)

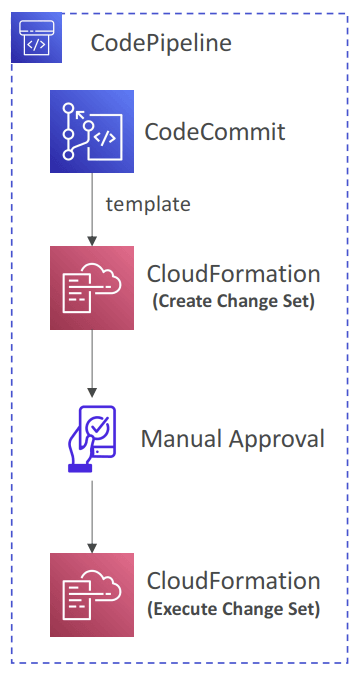

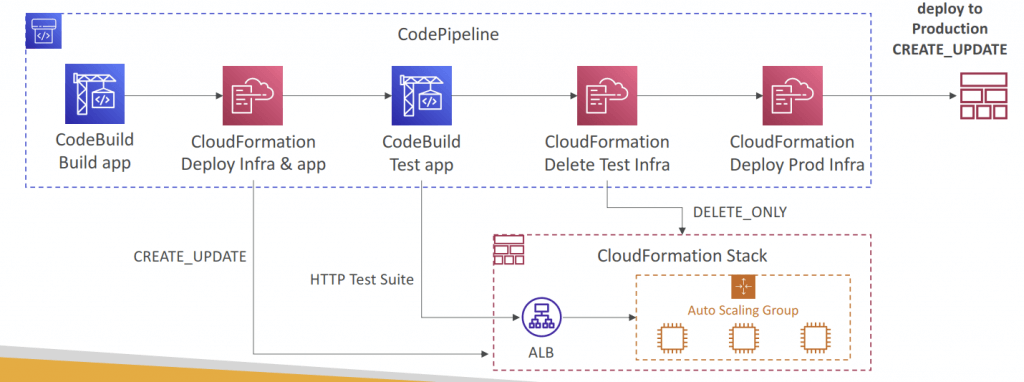

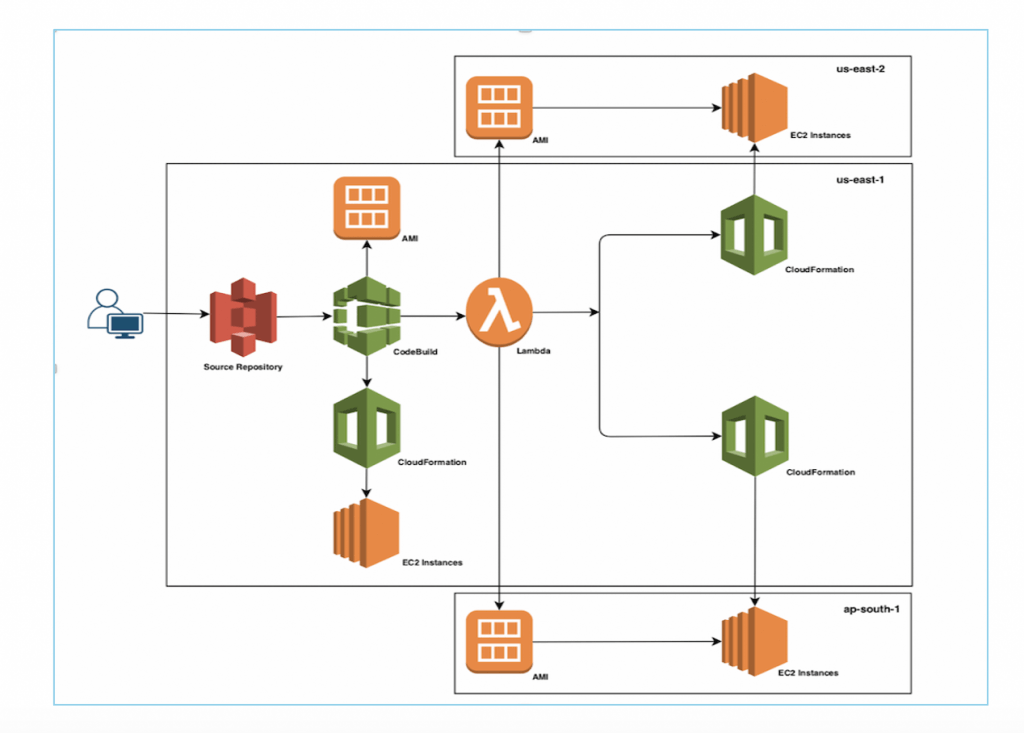

- CloudFormation as a Target

- CloudFormation Deploy Action can be used to deploy AWS resources

- Example: deploy Lambda functions using CDK or SAM (alternative to CodeDeploy)

- Works with CloudFormation StackSets to deploy across multiple AWS accounts and AWS Regions

- Configure different settings:

- Stack name, Change Set name, template, parameters, IAM Role, Action Mode…

- CREATE_UPDATE – create a stack, or update it if it already exists

- DELETE_ONLY – delete a stack if it exists

- Action Modes

- Create or Replace a Change Set, Execute a Change Set

- Create or Update a Stack, Delete a Stack, Replace a Failed Stack

- Template Parameter Overrides

- Specify a JSON object to override parameter values

- Retrieves the parameter value from a CodePipeline input artifact

- All parameter names must be present in the template

- Static – use template configuration file (recommended)

- Dynamic – use parameter overrides

- Fn::GetArtifactAtt

- Fn::GetParam

- In a CodePipeline stage, you can specify parameter overrides for AWS CloudFormation actions. Parameter overrides let you specify template parameter values that override values in a template configuration file. AWS CloudFormation provides functions to help you specify dynamic values (values that are unknown until the pipeline runs).

- You can use the Fn::GetArtifactAtt function, which retrieves the value of an attribute from an input artifact, such as the S3 bucket name where the artifact is stored. Use this function to specify attributes of an artifact, such as its file name or S3 bucket name, that can be used in the pipeline.
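A minimal sketch of a ParameterOverrides value that mixes a static value with both functions, built in Python so the resulting JSON is guaranteed to be well-formed. The artifact name BuildOutput, the file config.json, and the template parameters Environment, ArtifactBucket, and InstanceType are hypothetical; every key must match a parameter that exists in your template.

```python
import json

# Hypothetical names: "BuildOutput" is the action's input artifact and
# "config.json" is a JSON file inside it. The keys below are assumed to be
# parameters declared in the CloudFormation template.
ARTIFACT = "BuildOutput"

def build_parameter_overrides(environment: str) -> str:
    """Return the ParameterOverrides string for a CloudFormation deploy action."""
    overrides = {
        # Static value, known when the pipeline is defined
        "Environment": environment,
        # Dynamic: name of the S3 bucket where the input artifact is stored
        "ArtifactBucket": {"Fn::GetArtifactAtt": [ARTIFACT, "BucketName"]},
        # Dynamic: value of the "InstanceType" key in config.json inside the artifact
        "InstanceType": {"Fn::GetParam": [ARTIFACT, "config.json", "InstanceType"]},
    }
    return json.dumps(overrides)

print(build_parameter_overrides("staging"))
```

Keeping per-environment values in a template configuration file and reserving overrides for values only known at run time matches the "static vs dynamic" split above.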

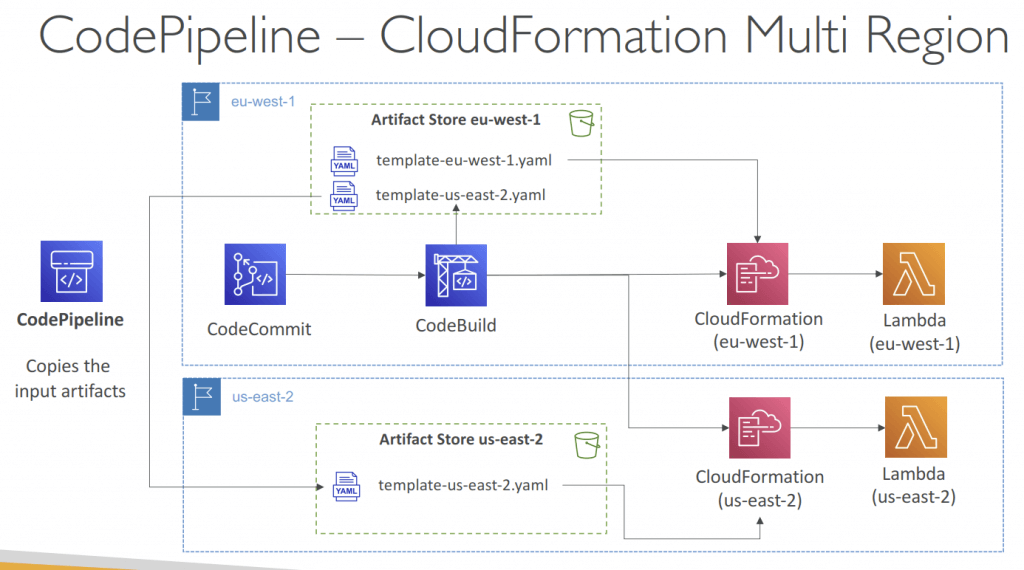

- Multi Region

- Actions in your pipeline can be in different regions

- Example: deploy a Lambda function through CloudFormation into multiple regions

- S3 Artifact Stores must be defined in each region where you have actions

- CodePipeline must have read/write access to every artifact bucket

- If you use the console, default artifact buckets are configured for you; otherwise, you must create them

- CodePipeline handles copying the input artifacts from one AWS Region to the other Regions when performing cross-region actions

- In your cross-region actions, only reference the name of the input artifacts

- You can add actions to your pipeline that are in an AWS Region different from your pipeline. When an AWS service is the provider for an action, and this action type/provider type are in a different AWS Region from your pipeline, this is a cross-region action. Certain action types in CodePipeline may only be available in certain AWS Regions.

- You can use the console, AWS CLI, or AWS CloudFormation to add cross-region actions in pipelines. If you use the console to create a pipeline or cross-region actions, default artifact buckets are configured by CodePipeline in the Regions where you have actions. When you use the AWS CLI, AWS CloudFormation, or an SDK to create a pipeline or cross-region actions, you provide the artifact bucket for each Region where you have actions. You must create the artifact bucket and encryption key in the same AWS Region as the cross-region action and in the same account as your pipeline.

- You cannot create cross-region actions for the following action types: source actions, third-party actions, and custom actions. When a pipeline includes a cross-region action as part of a stage, CodePipeline replicates only the input artifacts of the cross-region action from the pipeline Region to the action’s Region. The pipeline Region and the Region where your CloudWatch Events change detection resources are maintained remain the same. The Region where your pipeline is hosted does not change.

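A sketch of the multi-region rule above as a check over a pipeline declaration. It assumes the declaration shape used by the CodePipeline API, where a cross-region pipeline has an artifactStores map keyed by Region instead of a single artifactStore. The pipeline, stage, action, and bucket names are hypothetical, and real declarations carry many more fields per action.

```python
from typing import Dict, Set

# Simplified pipeline declaration; all names and Regions are hypothetical.
pipeline: Dict = {
    "name": "multi-region-demo",
    "artifactStores": {
        "us-east-1": {"type": "S3", "location": "demo-artifacts-us-east-1"},
        "eu-west-1": {"type": "S3", "location": "demo-artifacts-eu-west-1"},
    },
    "stages": [
        {"name": "Source", "actions": [{"name": "Source"}]},
        {"name": "Deploy", "actions": [
            {"name": "DeployUS", "region": "us-east-1"},
            {"name": "DeployEU", "region": "eu-west-1"},
            {"name": "DeployAU", "region": "ap-southeast-2"},
        ]},
    ],
}

def regions_missing_store(declaration: Dict, pipeline_region: str) -> Set[str]:
    """Return the Regions that have actions but no artifact store configured."""
    used = {
        # An action without an explicit region runs in the pipeline's Region
        action.get("region", pipeline_region)
        for stage in declaration["stages"]
        for action in stage["actions"]
    }
    return used - set(declaration.get("artifactStores", {}))

print(regions_missing_store(pipeline, "us-east-1"))  # {'ap-southeast-2'}
```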

| Owner | Type of action | Provider | Valid number of input artifacts | Valid number of output artifacts |
|---|---|---|---|---|
| AWS | Source | S3 | 0 | 1 |
| AWS | Source | CodeCommit | 0 | 1 |
| AWS | Source | ECR | 0 | 1 |
| ThirdParty | Source | CodeStarSourceConnection | 0 | 1 |
| AWS | Build | CodeBuild | 1 to 5 | 0 to 5 |
| AWS | Test | CodeBuild | 1 to 5 | 0 to 5 |
| AWS | Test | DeviceFarm | 1 | 0 |
| AWS | Approval | Manual | 0 | 0 |
| AWS | Deploy | S3 | 1 | 0 |
| AWS | Deploy | CloudFormation | 0 to 10 | 0 to 1 |
| AWS | Deploy | CodeDeploy | 1 | 0 |
| AWS | Deploy | ElasticBeanstalk | 1 | 0 |
| AWS | Deploy | OpsWorks | 1 | 0 |
| AWS | Deploy | ECS | 1 | 0 |
| AWS | Deploy | ServiceCatalog | 1 | 0 |
| AWS | Invoke | Lambda | 0 to 5 | 0 to 5 |
| ThirdParty | Deploy | AlexaSkillsKit | 1 to 2 | 0 |
| Custom | Build | Jenkins | 0 to 5 | 0 to 5 |
| Custom | Test | Jenkins | 0 to 5 | 0 to 5 |
| Custom | Any supported category | As specified in the custom action | 0 to 5 | 0 to 5 |

- [ 🧐QUESTION🧐 ] Auto-rollback when a CodeDeploy deployment fails

- You can automate your release process by using AWS CodePipeline to test your code and run your builds with CodeBuild. You can create reports in CodeBuild that contain details about tests that are run during builds.

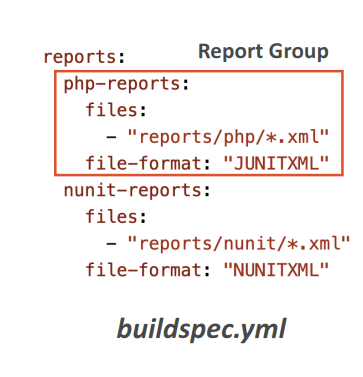

- You can create tests such as unit tests, configuration tests, and functional tests. The test file format can be JUnit XML or Cucumber JSON. Create your test cases with any test framework that can create files in one of those formats (for example, Surefire JUnit plugin, TestNG, and Cucumber). To create a test report, you add a report group name to the buildspec file of a build project with information about your test cases. When you run the build project, the test cases are run and a test report is created. You do not need to create a report group before you run your tests. If you specify a report group name, CodeBuild creates a report group for you when you run your reports. If you want to use a report group that already exists, you specify its ARN in the buildspec file.

- In AWS CodePipeline, you can add an approval action to a stage in a pipeline at the point where you want the pipeline execution to stop so that someone with the required AWS Identity and Access Management permissions can approve or reject the action.

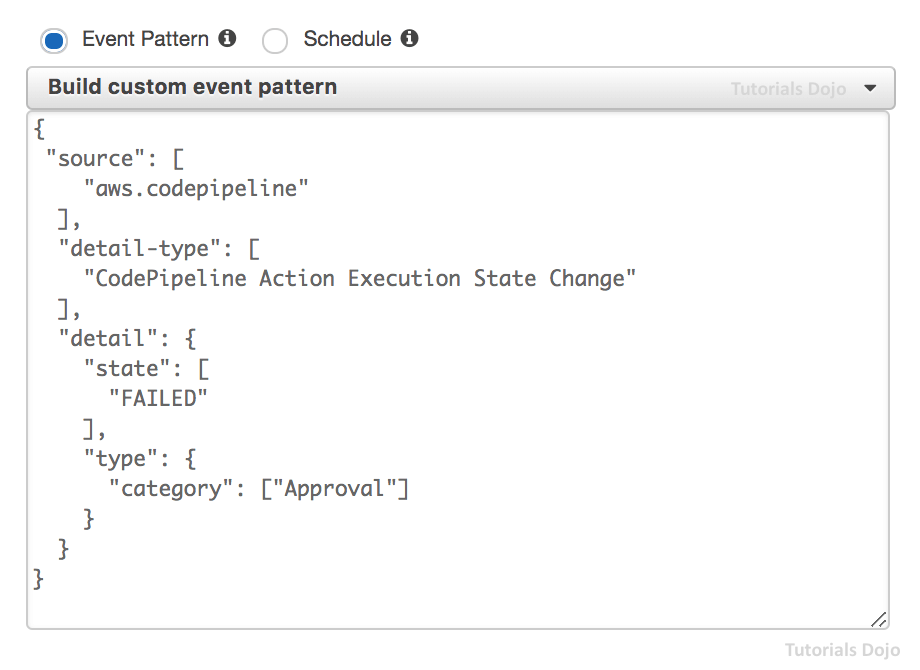

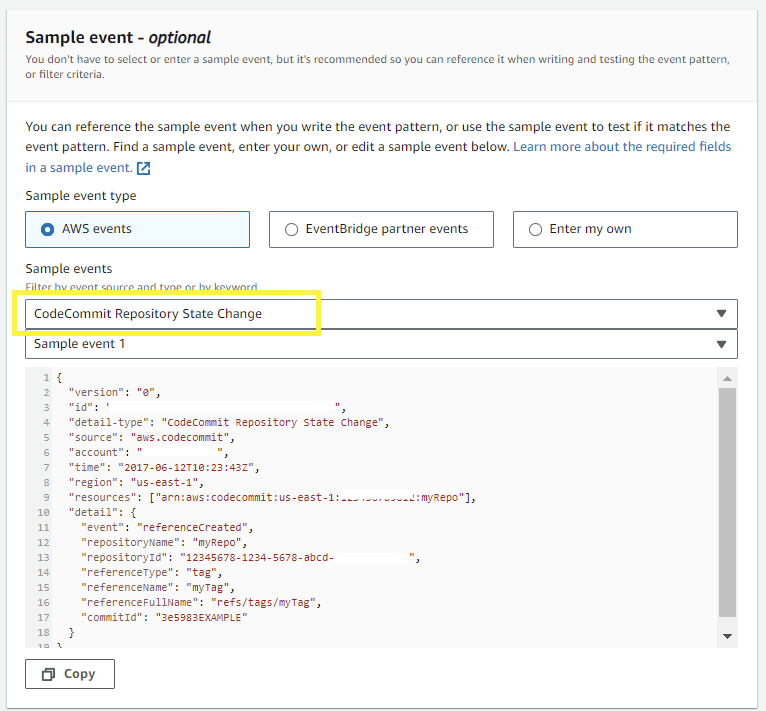

- [ 🧐QUESTION🧐 ] CodePipeline Monitoring

- EventBridge event bus events — You can monitor CodePipeline events in EventBridge, which detects changes in your pipeline, stage, or action execution status. EventBridge routes that data to targets such as AWS Lambda and Amazon Simple Notification Service.

- Notifications for pipeline events in the Developer Tools console — You can monitor CodePipeline events with notifications that you set up in the console and then create an Amazon Simple Notification Service topic and subscription for.

- AWS CloudTrail – Use CloudTrail to capture API calls made by or on behalf of CodePipeline in your AWS account and deliver the log files to an Amazon S3 bucket. You can choose to have CloudTrail publish Amazon SNS notifications when new log files are delivered so you can take quick action.

- Console and CLI — You can use the CodePipeline console and CLI to view details about the status of a pipeline or a particular pipeline execution.

- [ 🧐QUESTION🧐 ] CodePipeline Stage Change Catch

- Depending on the type of state change, you might want to send notifications, capture state information, take corrective action, initiate events, or take other actions.

- You can configure notifications to be sent when the state changes for:

- Specified pipelines or all your pipelines. You control this by using "detail-type": "CodePipeline Pipeline Execution State Change"

- Specified stages or all your stages, within a specified pipeline or all your pipelines. You control this by using "detail-type": "CodePipeline Stage Execution State Change"

- Specified actions or all actions, within a specified stage or all stages, within a specified pipeline or all your pipelines. You control this by using "detail-type": "CodePipeline Action Execution State Change"
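A sketch of an EventBridge event pattern that catches failed executions of one pipeline, plus a tiny matcher that only implements exact-value matching on arrays and nested objects (real EventBridge supports much more, such as prefix and numeric matching). The pipeline name demo-pipeline and the sample event are hypothetical, and only the event fields the pattern needs are shown.

```python
import json

# Rule pattern: executions of one (hypothetical) pipeline that end in FAILED.
pattern = {
    "source": ["aws.codepipeline"],
    "detail-type": ["CodePipeline Pipeline Execution State Change"],
    "detail": {"pipeline": ["demo-pipeline"], "state": ["FAILED"]},
}

def matches(pattern: dict, event: dict) -> bool:
    """Simplified matcher: every pattern key must exist and hold one of the listed values."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        if isinstance(expected, dict):
            # Nested object: recurse into the matching part of the event
            if not isinstance(event[key], dict) or not matches(expected, event[key]):
                return False
        elif event[key] not in expected:
            return False
    return True

sample_event = {
    "source": "aws.codepipeline",
    "detail-type": "CodePipeline Pipeline Execution State Change",
    "detail": {"pipeline": "demo-pipeline", "execution-id": "example-id", "state": "FAILED"},
}

print(json.dumps(pattern))          # the JSON you would paste into the rule
print(matches(pattern, sample_event))
```

Switching detail-type to the stage or action variant narrows the rule to stage or action state changes in the same way.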

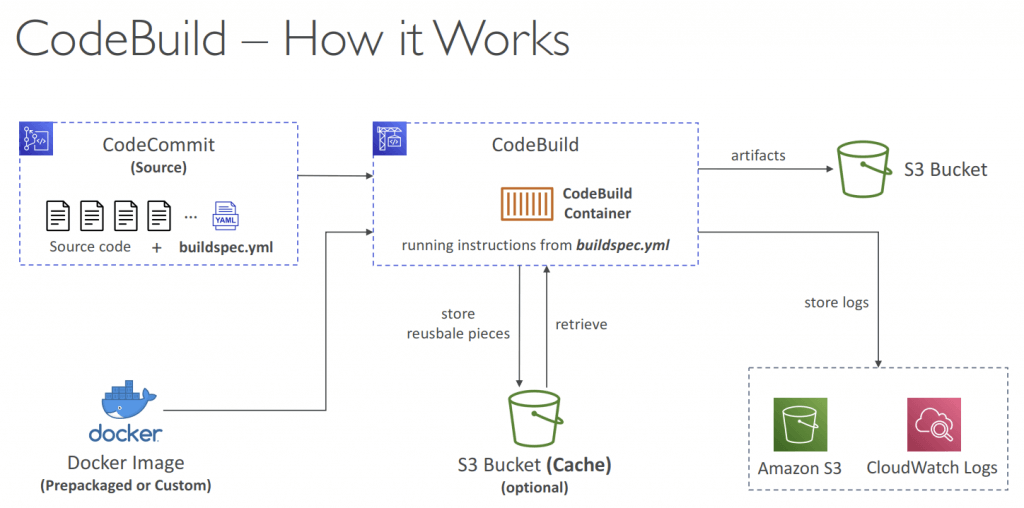

AWS CodeBuild

- A fully managed continuous integration (CI) service

- Compile the code, execute unit tests, and build the necessary artifacts

- Continuous scaling (no servers to manage or provision – no build queue)

- Alternative to other build tools (e.g., Jenkins)

- Charged per minute for compute resources (time it takes to complete the builds)

- Leverages Docker under the hood for reproducible builds

- Use prepackaged Docker images or create your own custom Docker image

- Security:

- Integration with KMS for encryption of build artifacts

- IAM for CodeBuild permissions, and VPC for network security

- AWS CloudTrail for API calls logging

- CodeBuild Service Role allows CodeBuild to access AWS resources on your behalf (assign the required permissions)

- Use cases:

- Download code from CodeCommit repository

- Fetch parameters from SSM Parameter Store

- Upload build artifacts to S3 bucket

- Fetch secrets from Secrets Manager

- Store logs in CloudWatch Logs

- A service role is an AWS Identity and Access Management (IAM) role that grants permissions to an AWS service so that the service can access AWS resources. You need an AWS CodeBuild service role so that CodeBuild can interact with dependent AWS services on your behalf.

- Use cases:

- In-transit and at-rest data encryption (cache, logs…)

- Source – CodeCommit, S3, Bitbucket, GitHub

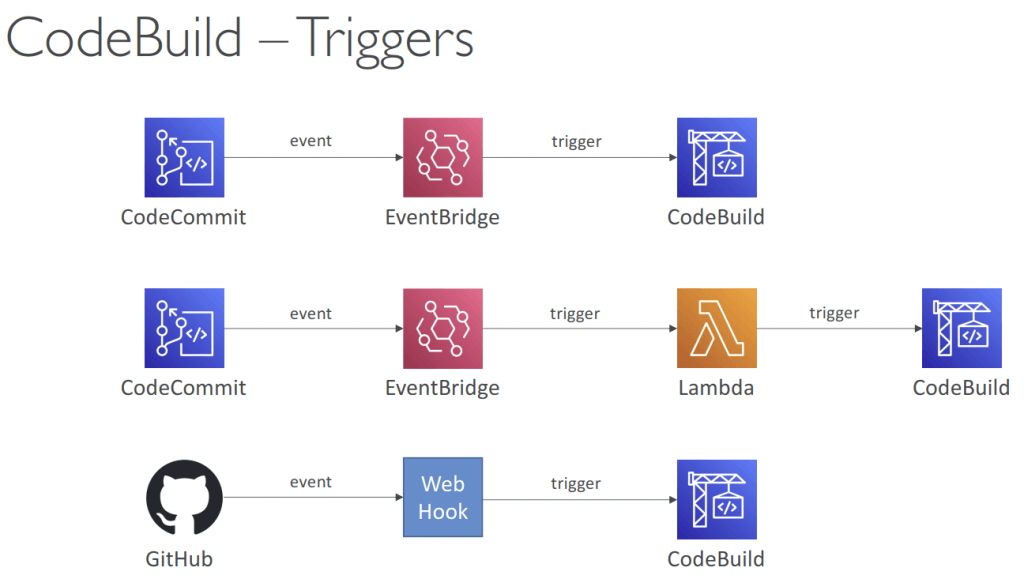

- Set up a GitHub webhook that starts a build whenever code changes are pushed to the repository

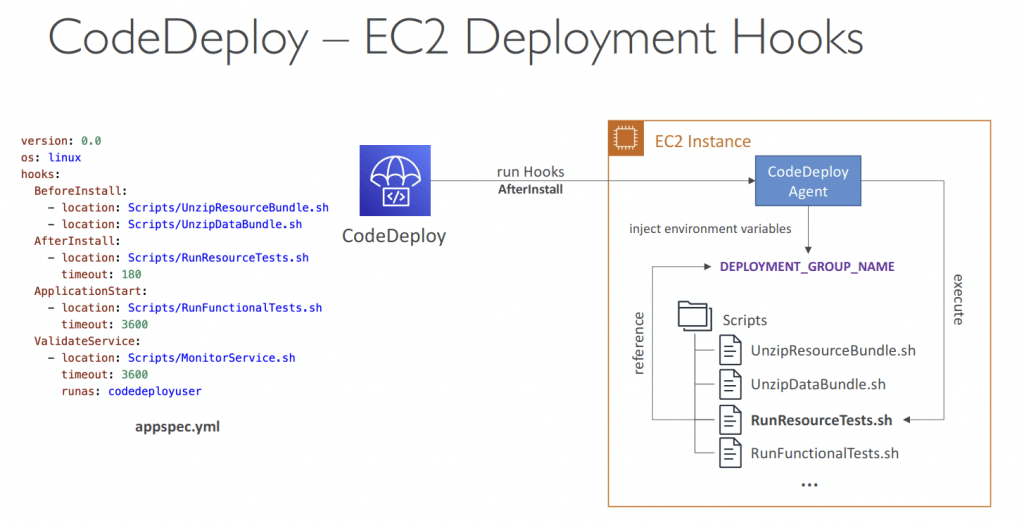

- Build instructions: defined in buildspec.yml at the root of the source code, or entered manually in the console

- env

- variables – plaintext variables

- parameter-store – variables stored in SSM Parameter Store

- secrets-manager – variables stored in AWS Secrets Manager

- phases

- install – install dependencies you may need for your build

- pre_build – final commands to execute before build

- build – actual build commands

- post_build – finishing touches (e.g., zip output)

- artifacts – what to upload to S3 (encrypted with KMS)

- cache – files to cache (usually dependencies) to S3 for future build speedup


- Output logs can be stored in Amazon S3 & CloudWatch Logs

- Use CloudWatch Metrics to monitor build statistics

- Use EventBridge to detect failed builds and trigger notifications

- Use CloudWatch Alarms to notify if you need “thresholds” for failures

- Build Projects can be defined within CodePipeline or CodeBuild

- You can run CodeBuild locally on your desktop (after installing Docker)

- Inside VPC

- By default, your CodeBuild containers are launched outside your VPC

- So they cannot access resources in a VPC

- You can specify a VPC configuration:

- VPC ID

- Subnet IDs

- Security Group IDs

- Then your build can access resources in your VPC (e.g., RDS, ElastiCache, EC2, ALB…)

- Use cases: integration tests, data query, internal load balancers…


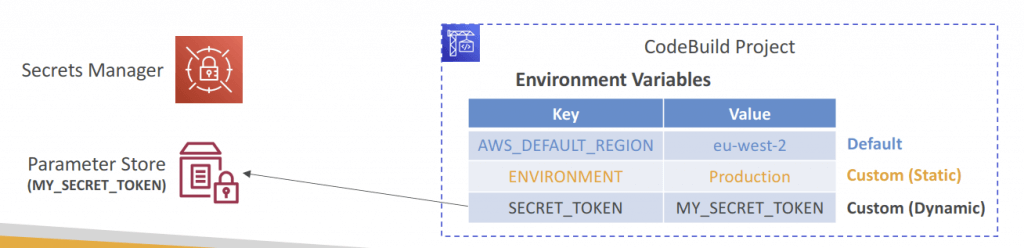

- Environment Variables

- Default Environment Variables

- Defined and provided by AWS

- AWS_DEFAULT_REGION, CODEBUILD_BUILD_ARN, CODEBUILD_BUILD_ID, CODEBUILD_BUILD_IMAGE…

- Custom Environment Variables

- Static – defined at build time (override using start-build API call)

- Dynamic – using SSM Parameter Store and Secrets Manager

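A sketch of how a script called from a build phase might read these variables. CodeBuild exposes default, plaintext, Parameter Store, and Secrets Manager variables to the build as ordinary environment variables; DEPLOY_STAGE and DB_PASSWORD are hypothetical custom names that would be declared in the env section of buildspec.yml.

```python
import os
from typing import Mapping, Optional

def build_context(env: Optional[Mapping[str, str]] = None) -> dict:
    """Collect build metadata from CodeBuild-provided and custom environment variables."""
    env = os.environ if env is None else env
    return {
        # Default variables set by CodeBuild in every build
        "build_id": env.get("CODEBUILD_BUILD_ID", "local"),
        "region": env.get("AWS_DEFAULT_REGION", "unknown"),
        # Hypothetical plaintext variable from env/variables in buildspec.yml
        "stage": env.get("DEPLOY_STAGE", "dev"),
        # Hypothetical variable from env/secrets-manager: report presence only, never the value
        "has_db_password": bool(env.get("DB_PASSWORD")),
    }

if __name__ == "__main__":
    print(build_context())
```

Passing a mapping explicitly keeps the function testable outside CodeBuild; inside a build it falls back to the real environment.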

- Build Badges

- Supported for CodeCommit, GitHub, and Bitbucket

- Note: Badges are available at the branch level

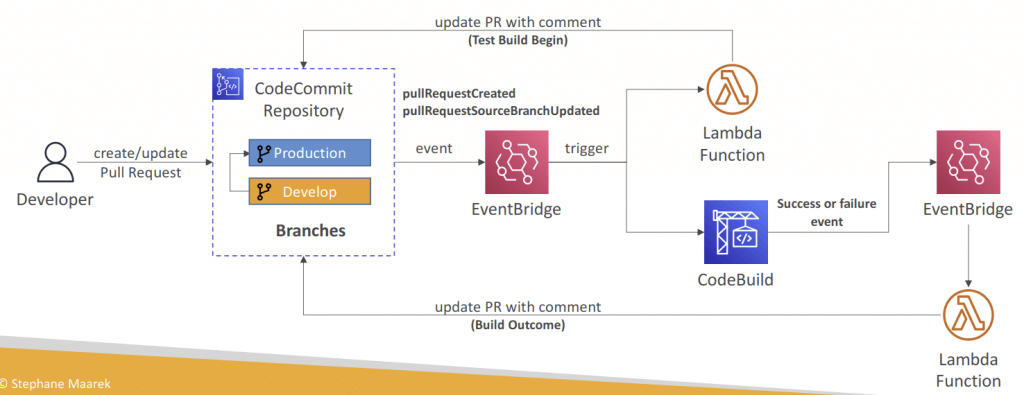

- Validate Pull Requests

- Validate proposed code changes in PRs before they get merged

- Ensure high level of code quality and avoid code conflicts

- Test Reports

- Contains details about tests that are run during builds

- Unit tests, configuration tests, functional tests

- Create your test cases with any test framework that can create report files in the following formats:

- JUnit XML, NUnit XML, NUnit3 XML

- Cucumber JSON, TestNG XML, Visual Studio TRX

- Create a test report and add a Report Group name in buildspec.yml file with information about your tests
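A sketch of a script that emits results in JUnit XML, one of the accepted formats, using only the Python standard library. The suite and test names are hypothetical, and the report group's file settings in buildspec.yml would need to point at wherever the output ends up (for example, by redirecting stdout to a file in a build command).

```python
import xml.etree.ElementTree as ET
from typing import List, Optional, Tuple

# (test name, duration in seconds, failure message or None when the test passed)
Result = Tuple[str, float, Optional[str]]

def junit_xml(suite_name: str, results: List[Result]) -> str:
    """Render test results as a minimal JUnit XML <testsuite> document."""
    failures = sum(1 for _, _, message in results if message is not None)
    suite = ET.Element("testsuite", name=suite_name,
                       tests=str(len(results)), failures=str(failures))
    for name, seconds, message in results:
        case = ET.SubElement(suite, "testcase", name=name,
                             classname=suite_name, time=f"{seconds:.3f}")
        if message is not None:
            # A <failure> child marks the test case as failed in the report
            ET.SubElement(case, "failure", message=message)
    return ET.tostring(suite, encoding="unicode")

report = junit_xml("smoke", [("test_health", 0.12, None),
                             ("test_login", 0.40, "HTTP 500 from /login")])

if __name__ == "__main__":
    print(report)
```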

AWS CodeDeploy

- [Machine Learning] Automatically release new versions of the models to various environments while avoiding downtime and handling the complexity of updating them

- Deploy new application versions

- EC2 Instances, On-premises servers

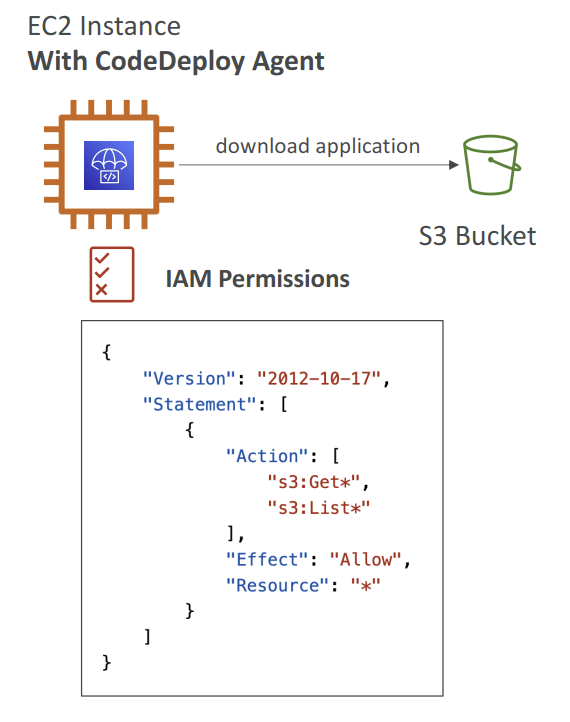

- Requires the CodeDeploy agent on each target instance, and the instance needs permission to read the revision from S3

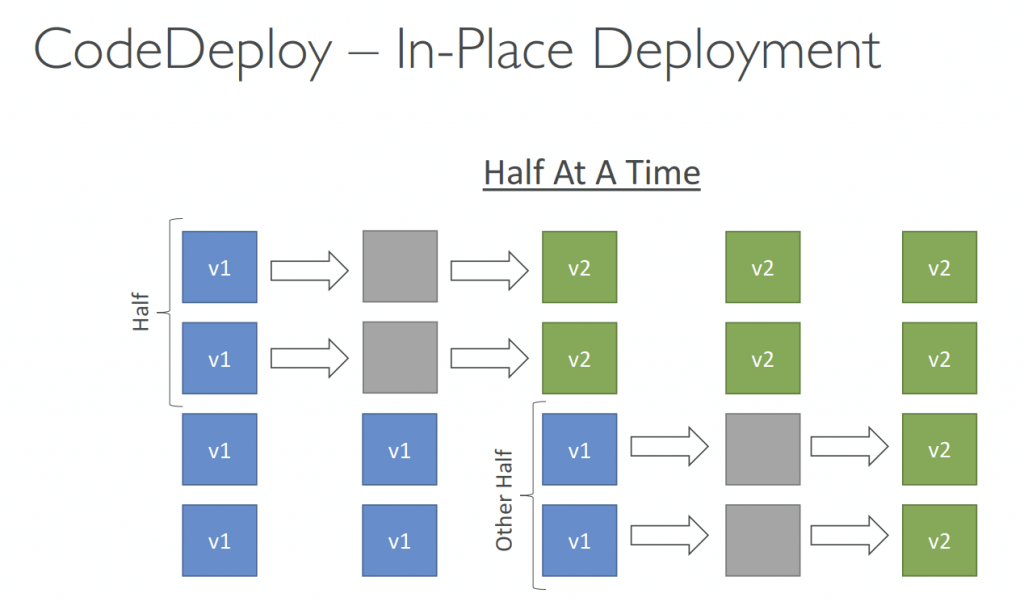

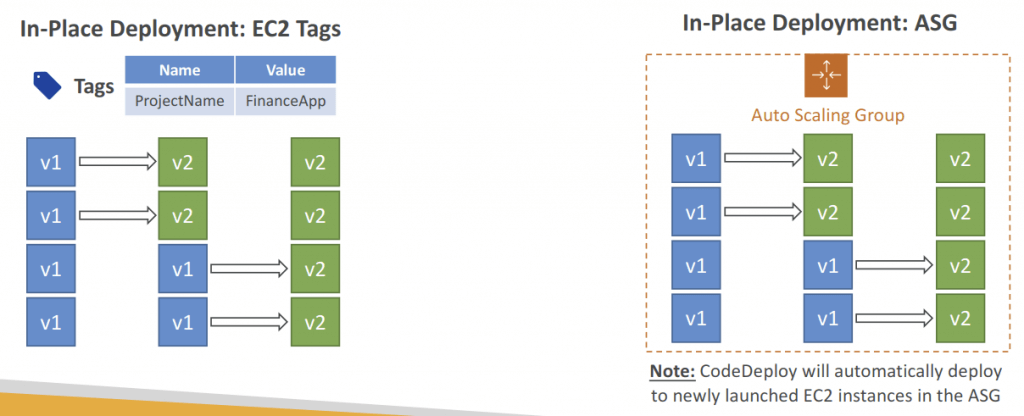

- In-place Deployment (compatible with existing ASG)

- AllAtOnce: most downtime

- HalfAtATime: reduced capacity by 50%

- OneAtATime: slowest, lowest availability impact (health-check on every new instance deployed)

- Custom: define your %
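A simplified model of how the predefined in-place configurations split a fleet into batches. It assumes each configuration is described only by how many instances may be updated at once (all of them, half rounded down, or one) and that every batch succeeds. Real CodeDeploy expresses this as a minimum number of healthy hosts and handles failures and edge cases not modelled here.

```python
from typing import Dict, List

def batch_sizes(instance_count: int, max_in_flight: int) -> List[int]:
    """Split a fleet into batches of at most `max_in_flight` instances (at least 1)."""
    batch = max(1, max_in_flight)
    sizes, remaining = [], instance_count
    while remaining > 0:
        sizes.append(min(batch, remaining))
        remaining -= sizes[-1]
    return sizes

def predefined(instance_count: int) -> Dict[str, List[int]]:
    """Batches for the three predefined in-place configurations (simplified model)."""
    in_flight = {
        "AllAtOnce": instance_count,        # most downtime
        "HalfAtATime": instance_count // 2,  # capacity reduced by about 50%
        "OneAtATime": 1,                     # slowest, lowest availability impact
    }
    return {name: batch_sizes(instance_count, n) for name, n in in_flight.items()}

print(predefined(5))
# {'AllAtOnce': [5], 'HalfAtATime': [2, 2, 1], 'OneAtATime': [1, 1, 1, 1, 1]}
```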

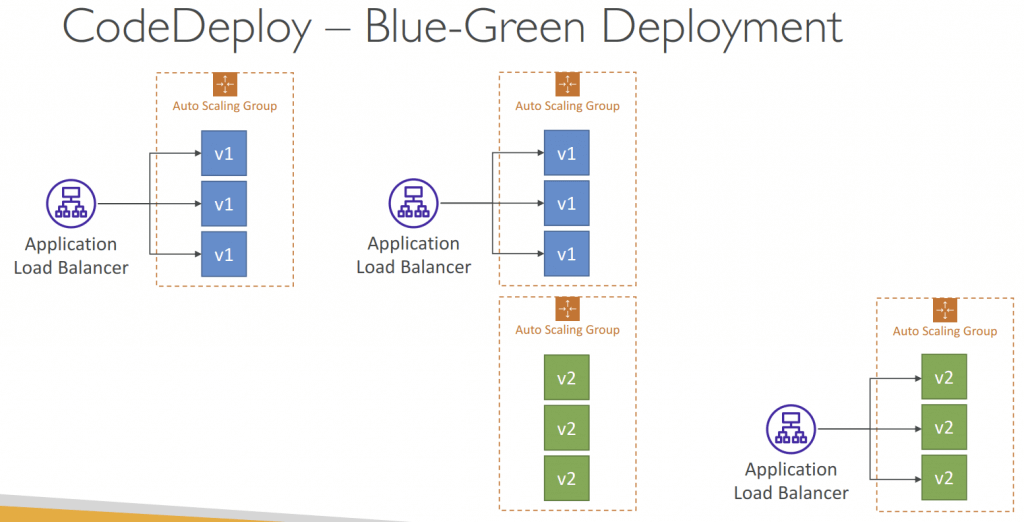

- or Blue/Green Deployment (new ASG created) (also as one deployment attempt)

- must be using an ELB

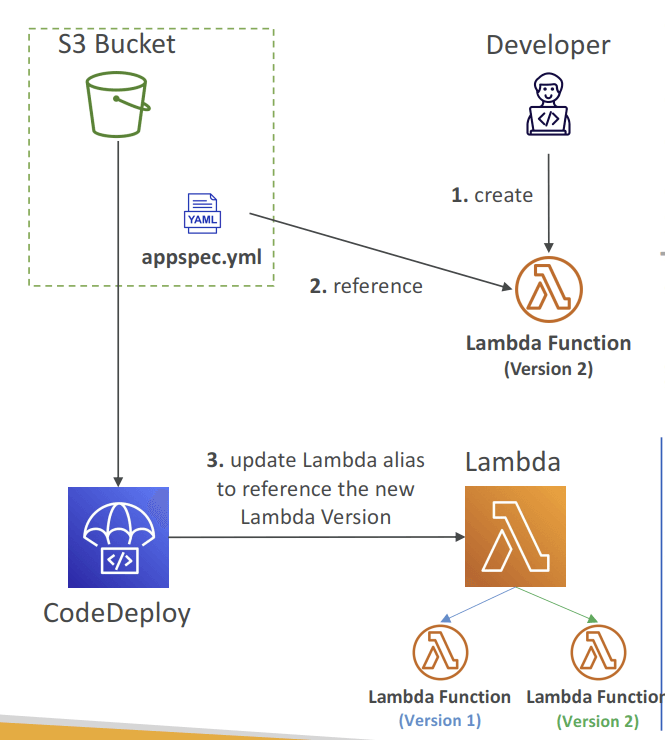

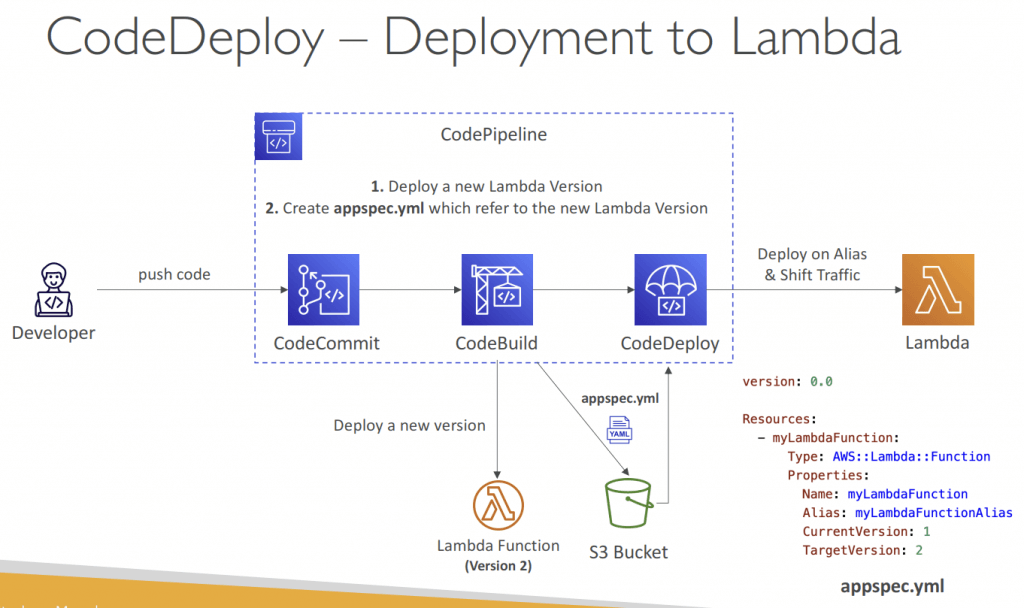

- Lambda functions (integrated into SAM)

- A new Lambda function version is created and traffic is shifted to it, so only Blue/Green deployment is supported

- Specify the Version info in the appspec.yml file

- Name (required) – the name of the Lambda function to deploy

- Alias (required) – the name of the alias to the Lambda function

- CurrentVersion (required) – the version of the Lambda function traffic currently points to

- TargetVersion (required) – the version of the Lambda function traffic is shifted to

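A sketch of the Resources section of a Lambda AppSpec, built as a Python dict and serialized to JSON (AppSpec files for Lambda deployments can be written in YAML or JSON). The function name orders-api, the alias live, and the version numbers are hypothetical.

```python
import json

def lambda_appspec(function: str, alias: str, current: str, target: str) -> dict:
    """Build a Lambda AppSpec that shifts `alias` from version `current` to `target`."""
    return {
        "version": 0.0,
        "Resources": [{
            function: {  # logical resource name; reusing the function name here
                "Type": "AWS::Lambda::Function",
                "Properties": {
                    "Name": function,           # function to deploy
                    "Alias": alias,             # alias whose traffic is shifted
                    "CurrentVersion": current,  # version the alias points to now
                    "TargetVersion": target,    # version traffic is shifted to
                },
            }
        }],
    }

print(json.dumps(lambda_appspec("orders-api", "live", "3", "4"), indent=2))
```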

- Traffic is shifted using one of these deployment configurations:

- Linear: grow traffic every N minutes until 100%

- LambdaLinear10PercentEvery3Minutes

- LambdaLinear10PercentEvery10Minutes

- Canary (two traffic shifts only): shift X percent first, then the remaining traffic

- LambdaCanary10Percent5Minutes

- LambdaCanary10Percent30Minutes

- AllAtOnce (a single traffic shift): all traffic moves immediately

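A simplified model of how much traffic the new version receives over time under the linear and canary configurations above. It assumes the first increment is shifted as soon as traffic shifting starts and ignores hook execution time and rollbacks.

```python
def linear_percent(minute: float, step: int = 10, interval: float = 3) -> int:
    """Linear: `step`% more traffic at t=0 and after every `interval` minutes, capped at 100."""
    return min(100, step * (int(minute // interval) + 1))

def canary_percent(minute: float, first: int = 10, wait: float = 5) -> int:
    """Canary: `first`% immediately, then all remaining traffic after `wait` minutes."""
    return 100 if minute >= wait else first

# Defaults model LambdaLinear10PercentEvery3Minutes and LambdaCanary10Percent5Minutes
for t in (0, 3, 5, 10, 27):
    print(f"t={t:>2} min  linear={linear_percent(t):>3}%  canary={canary_percent(t):>3}%")
```

The same functions cover the 10-minute and 30-minute variants by changing `interval` or `wait`.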

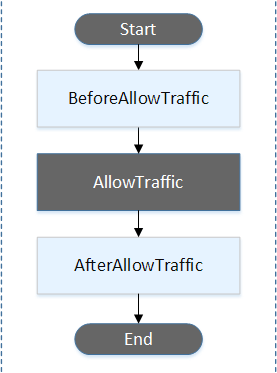

- For Lambda Deployment, the hook would be “a Lambda function”

- BeforeAllowTraffic and AfterAllowTraffic hooks can be used to check the health of the Lambda function
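A sketch of such a hook Lambda function: it runs a check and reports the outcome back to CodeDeploy with the put_lifecycle_event_hook_execution_status call of the boto3 CodeDeploy client, using the DeploymentId and LifecycleEventHookExecutionId fields CodeDeploy passes in the event. The smoke test is a hypothetical placeholder, and the client is injectable so the logic can be exercised without AWS.

```python
from typing import Callable

def handle_hook(event: dict, is_healthy: Callable[[], bool], client=None) -> str:
    """Run a validation check and report its result for one lifecycle hook execution."""
    if client is None:
        import boto3  # bundled with the AWS Lambda Python runtime
        client = boto3.client("codedeploy")
    try:
        healthy = bool(is_healthy())
    except Exception:
        healthy = False  # a crashing check reports Failed instead of leaving the hook waiting
    status = "Succeeded" if healthy else "Failed"
    client.put_lifecycle_event_hook_execution_status(
        deploymentId=event["DeploymentId"],
        lifecycleEventHookExecutionId=event["LifecycleEventHookExecutionId"],
        status=status,
    )
    return status

def smoke_test() -> bool:
    # Hypothetical placeholder: e.g. invoke the target version and inspect the response
    return True

def lambda_handler(event, context):
    # Referenced from appspec.yml as the BeforeAllowTraffic or AfterAllowTraffic hook
    return handle_hook(event, smoke_test)
```

Reporting "Failed" makes CodeDeploy stop the deployment, which is what triggers an automatic rollback when that is enabled.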


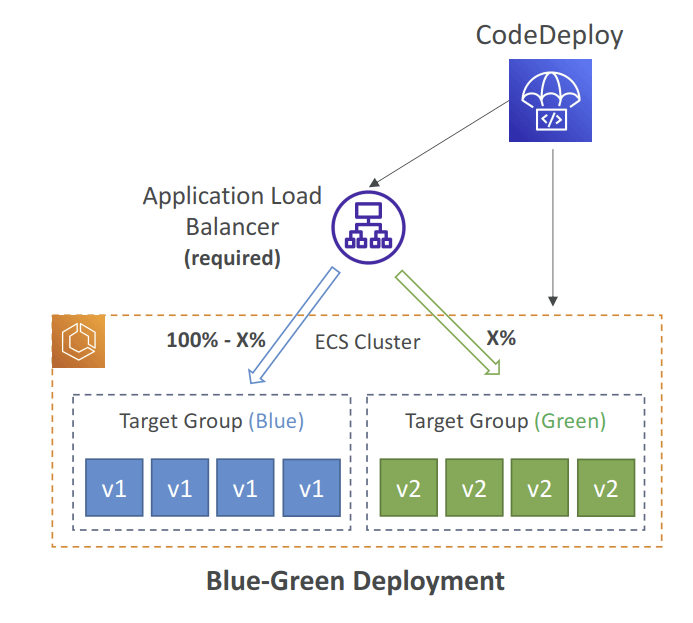

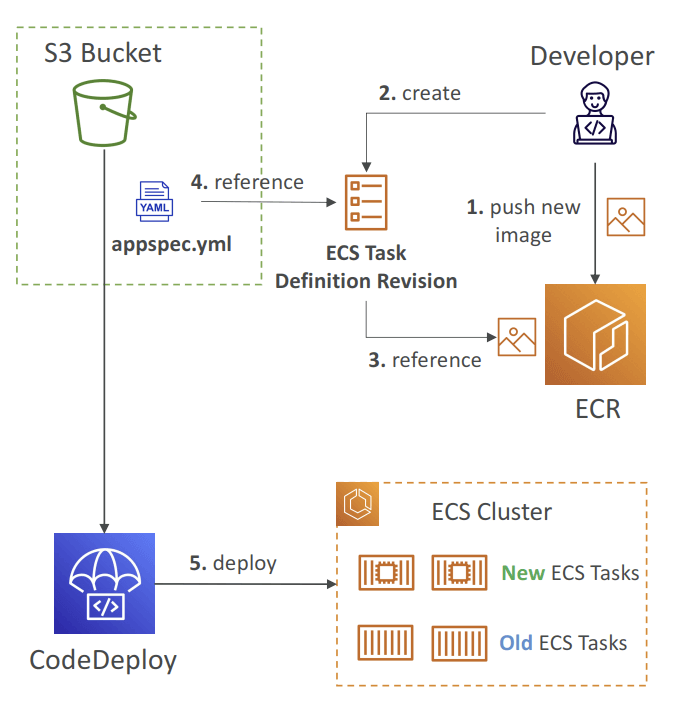

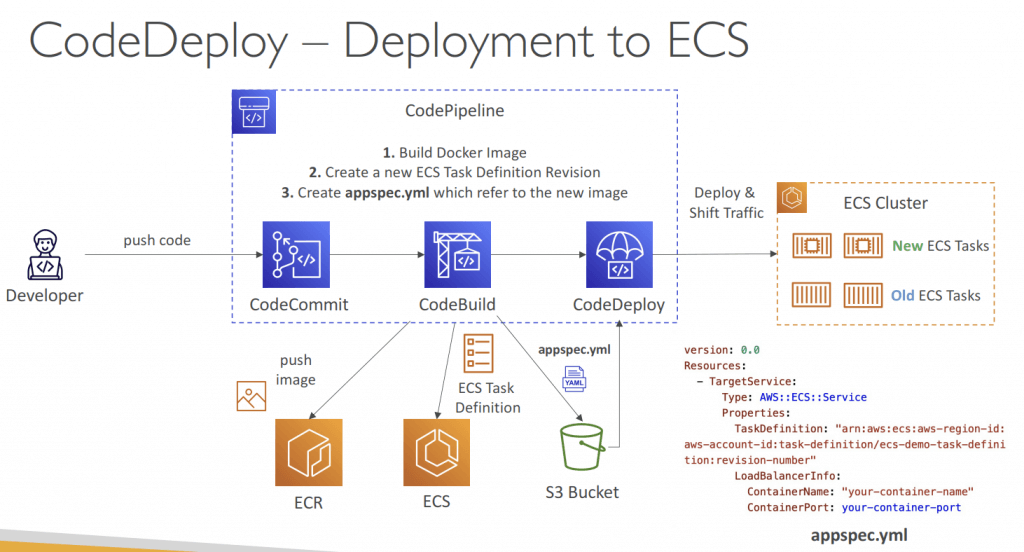

- ECS Services

- with only Blue/Green deployment

- needs Application Load Balancer (ALB) to control traffic

- Linear: grow traffic every N minutes until 100%

- ECSLinear10PercentEvery1Minutes

- ECSLinear10PercentEvery3Minutes

- Canary (two traffic shifts only): shift X percent first, then the remaining traffic

- ECSCanary10Percent5Minutes

- ECSCanary10Percent15Minutes

- AllAtOnce (a single traffic shift): all traffic moves immediately

- not Elastic Beanstalk

- The ECS Task Definition and new Container Images must be already created

- A reference to the new ECS Task Revision (TaskDefinition) and load balancer information (LoadBalancerInfo) are specified in the appspec.yml file

- Can define a second ELB Test Listener to test the Replacement (Green) version before traffic is rebalanced

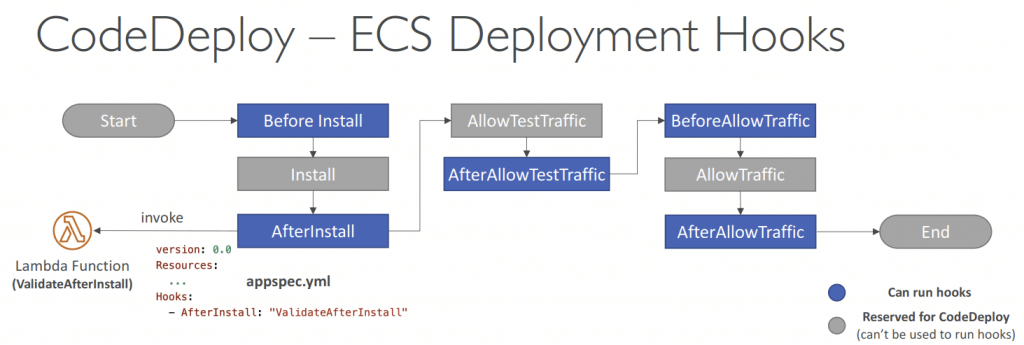

- For ECS Deployment, the hook would be “a Lambda function”

- For example, AfterAllowTestTraffic – run AWS Lambda function after the test ELB Listener serves traffic to the Replacement ECS Task Set
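A sketch of an ECS AppSpec built as a Python dict for JSON output, with a guard that only accepts the hookable ECS lifecycle events. The task definition ARN (with the placeholder account 111122223333), the container name web, port 8080, and the Lambda function name validate-green are hypothetical.

```python
import json
from typing import Dict

# Hookable lifecycle events for ECS deployments, in the order they run
ECS_HOOKS = ("BeforeInstall", "AfterInstall", "AfterAllowTestTraffic",
             "BeforeAllowTraffic", "AfterAllowTraffic")

def ecs_appspec(task_definition_arn: str, container: str, port: int,
                hooks: Dict[str, str]) -> Dict:
    """Build an ECS AppSpec; `hooks` maps a lifecycle event to one Lambda function name."""
    unknown = set(hooks) - set(ECS_HOOKS)
    if unknown:
        raise ValueError(f"not an ECS hook: {sorted(unknown)}")
    return {
        "version": 0.0,
        "Resources": [{
            "TargetService": {
                "Type": "AWS::ECS::Service",
                "Properties": {
                    "TaskDefinition": task_definition_arn,
                    "LoadBalancerInfo": {"ContainerName": container, "ContainerPort": port},
                },
            }
        }],
        # One Lambda function per hook, emitted in lifecycle order
        "Hooks": [{event: hooks[event]} for event in ECS_HOOKS if event in hooks],
    }

spec = ecs_appspec(
    "arn:aws:ecs:us-east-1:111122223333:task-definition/web:7",  # hypothetical revision
    "web", 8080,
    {"AfterAllowTestTraffic": "validate-green"},
)
print(json.dumps(spec, indent=2))
```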


- A deployment group is the AWS CodeDeploy entity for grouping EC2 instances or AWS Lambda functions in a CodeDeploy deployment. For EC2 deployments, it is a set of instances associated with an application that you target for a deployment.

- Use appspec.yml to define actions (manage each application deployment as a series of lifecycle event hooks in CodeDeploy)

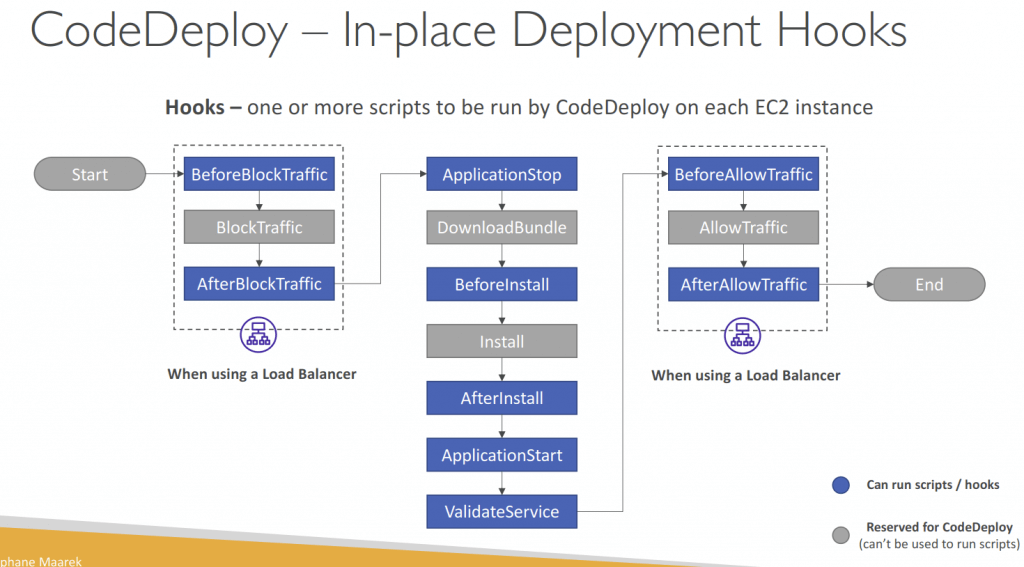

- For “In-place Deployments”

- Use EC2 Tags or ASG to identify instances you want to deploy to

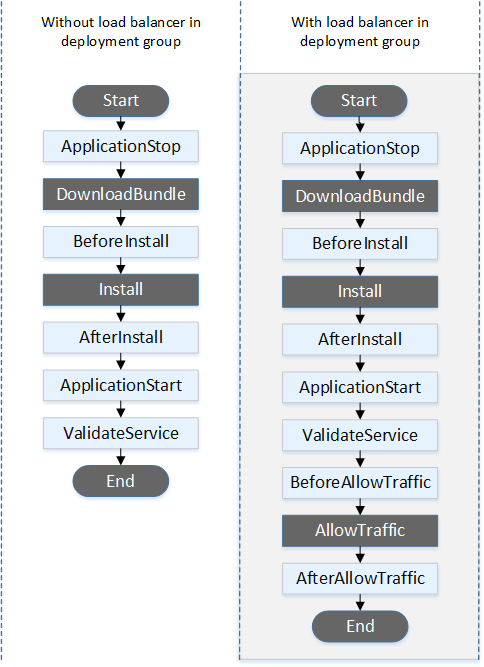

- With a Load Balancer: traffic is stopped before instance is updated, and started again after the instance is updated

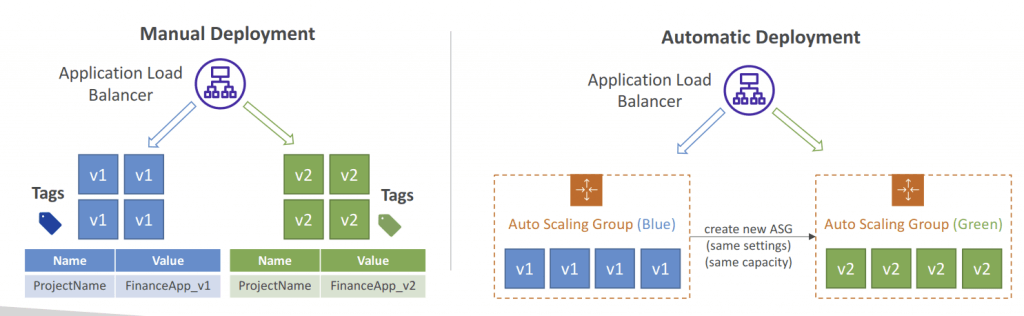

- For “Blue/Green Deployments”

- Manual mode: provision EC2 Instances for Blue and Green and identify them by Tags

- Automatic mode: new ASG is provisioned by CodeDeploy (settings are copied)

- Using a Load Balancer is necessary for Blue/Green

- BlueInstanceTerminationOption – specify whether to terminate the original (Blue) EC2 instances after a successful Blue/Green deployment

- Action Terminate – Specify Wait Time, default 1 hour, max 2 days

- terminationWaitTimeInMinutes: The number of minutes to wait after a successful blue/green deployment before terminating instances from the original environment.

- Action Keep_Alive – Instances are kept running but deregistered from ELB and deployment group

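A sketch of these settings as they might be passed in the blueGreenDeploymentConfiguration parameter of the boto3 create_deployment_group call: COPY_AUTO_SCALING_GROUP corresponds to the automatic mode and DISCOVER_EXISTING to the manual mode. Other required parameters, such as deploymentStyle and loadBalancerInfo, are omitted, and the 60-minute default mirrors the note above rather than an API default.

```python
MAX_WAIT_MINUTES = 2 * 24 * 60  # "max 2 days" from the notes above

def blue_green_config(copy_asg: bool, terminate_blue: bool, wait_minutes: int = 60) -> dict:
    """Build a blueGreenDeploymentConfiguration-style dict for an EC2 deployment group."""
    if not 0 <= wait_minutes <= MAX_WAIT_MINUTES:
        raise ValueError(f"wait must be between 0 and {MAX_WAIT_MINUTES} minutes")
    termination = {"action": "TERMINATE" if terminate_blue else "KEEP_ALIVE"}
    if terminate_blue:
        # Minutes to keep the original (Blue) instances after a successful deployment
        termination["terminationWaitTimeInMinutes"] = wait_minutes
    return {
        # Automatic mode copies the ASG; manual mode discovers instances you provisioned
        "greenFleetProvisioningOption": {
            "action": "COPY_AUTO_SCALING_GROUP" if copy_asg else "DISCOVER_EXISTING"
        },
        "terminateBlueInstancesOnDeploymentSuccess": termination,
    }

print(blue_green_config(copy_asg=True, terminate_blue=True, wait_minutes=120))
```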

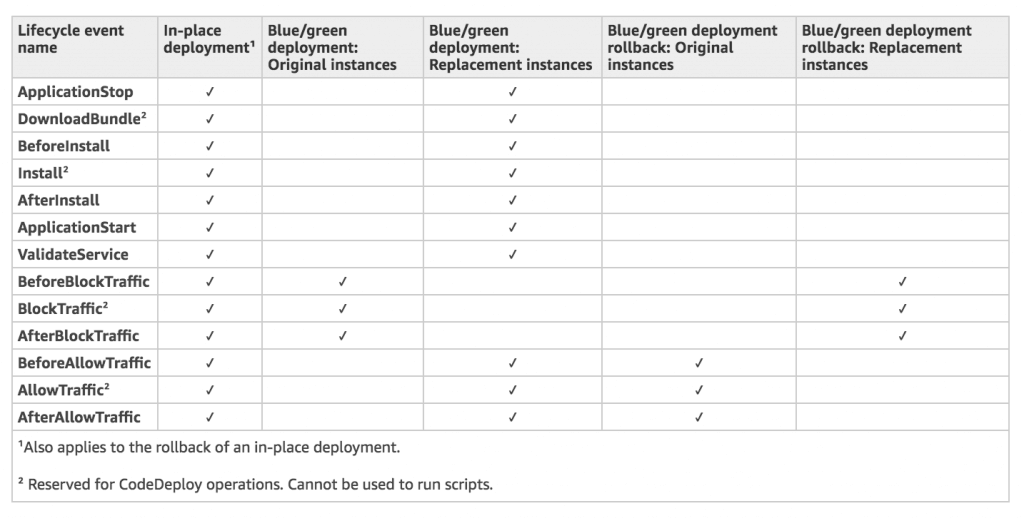

- Deployment Hooks Examples

- BeforeInstall – used for preinstall tasks, such as decrypting files and creating a backup of the current version

- AfterInstall – used for tasks such as configuring your application or changing file permissions

- ApplicationStart – used to start services that stopped during ApplicationStop

- ValidateService – used to verify the deployment was completed successfully

- BeforeAllowTraffic – run tasks on EC2 instances before they are registered to the load balancer

- Example: perform health checks on the application and fail the deployment if the health checks are not successful

- BlockTraffic – reserved for the CodeDeploy agent and cannot be used to run custom scripts

- Deployment Configurations

- Specifies the number of instances that must remain available at any time during the deployment

- Use Pre-defined Deployment Configurations

- CodeDeployDefault.AllAtOnce – deploy to as many instances as possible at once

- CodeDeployDefault.HalfAtATime – deploy to up to half of the instances at a time

- CodeDeployDefault.OneAtATime – deploy to only one instance at a time

- Can create your own custom Deployment Configurations

- Triggers: Publish Deployment or EC2 instance events to SNS topic

- Examples: DeploymentSuccess, DeploymentFailure, InstanceFailure

- Rollback = redeploy the last known good revision as a new deployment (with a new deployment ID), not a restored version

- Deployments can be rolled back:

- Automatically – roll back when a deployment fails, or when a CloudWatch Alarm threshold is met

- Manually

- Disable Rollbacks — do not perform rollbacks for this deployment

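A sketch of the rollback options as keyword arguments for boto3 CodeDeploy calls such as update_deployment_group, assuming the autoRollbackConfiguration and alarmConfiguration parameter shapes. The alarm name high-5xx-rate and the application and group names in the comment are hypothetical. With no events selected, the result disables automatic rollbacks.

```python
from typing import Dict, Sequence

def rollback_settings(on_failure: bool, on_alarm: bool,
                      alarm_names: Sequence[str] = ()) -> Dict:
    """Keyword arguments that enable or disable CodeDeploy automatic rollbacks."""
    events = []
    if on_failure:
        events.append("DEPLOYMENT_FAILURE")
    if on_alarm:
        if not alarm_names:
            raise ValueError("rolling back on alarm needs at least one CloudWatch alarm")
        events.append("DEPLOYMENT_STOP_ON_ALARM")
    settings = {"autoRollbackConfiguration": {"enabled": bool(events), "events": events}}
    if on_alarm:
        settings["alarmConfiguration"] = {
            "enabled": True,
            "alarms": [{"name": name} for name in alarm_names],
        }
    return settings

# Hypothetical usage with boto3 (other arguments omitted):
# boto3.client("codedeploy").update_deployment_group(
#     applicationName="web", currentDeploymentGroupName="prod",
#     **rollback_settings(on_failure=True, on_alarm=True, alarm_names=["high-5xx-rate"]))
print(rollback_settings(on_failure=True, on_alarm=True, alarm_names=["high-5xx-rate"]))
```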

- TroubleShooting

- CASE ONE – Deployment Error: “InvalidSignatureException – Signature expired: [time] is now earlier than [time]”

- For CodeDeploy to perform its operations, it requires accurate time references

- If the date and time on your EC2 instance are not set correctly, they might not match the signature date of your deployment request, which CodeDeploy rejects

- CASE TWO – When the Deployment or all Lifecycle Events are skipped (EC2/On-Premises), you get one of the following errors:

- “The overall deployment failed because too many individual instances failed deployment”

- “Too few healthy instances are available for deployment”

- “Some instances in your deployment group are experiencing problems. (Error code: HEALTH_CONSTRAINTS)”

- Reasons:

- CodeDeploy Agent might not be installed, running, or it can’t reach CodeDeploy

- CodeDeploy Service Role or IAM instance profile might not have the required permissions

- If you’re using an HTTP proxy, configure the CodeDeploy Agent with the :proxy_uri: parameter

- Date and Time mismatch between CodeDeploy and Agent

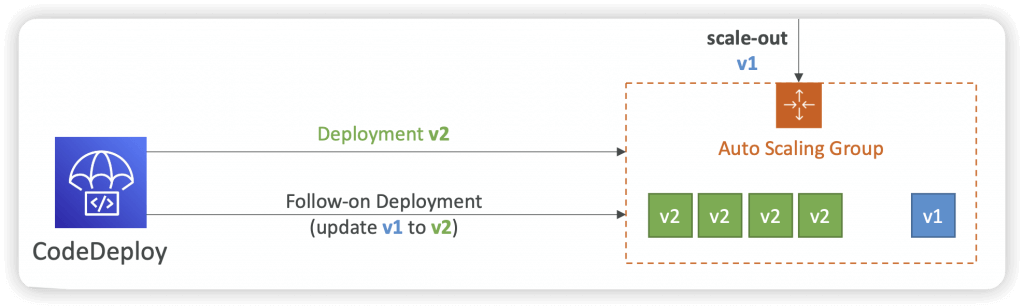

- CASE THREE – If a CodeDeploy deployment to ASG is underway and a scale-out event occurs, the new instances will be updated with the application revision that was most recently deployed (not the application revision that is currently being deployed)

- ASG will have EC2 instances hosting different versions of the application

- By default, CodeDeploy automatically starts a follow-on deployment to update any outdated EC2 instances

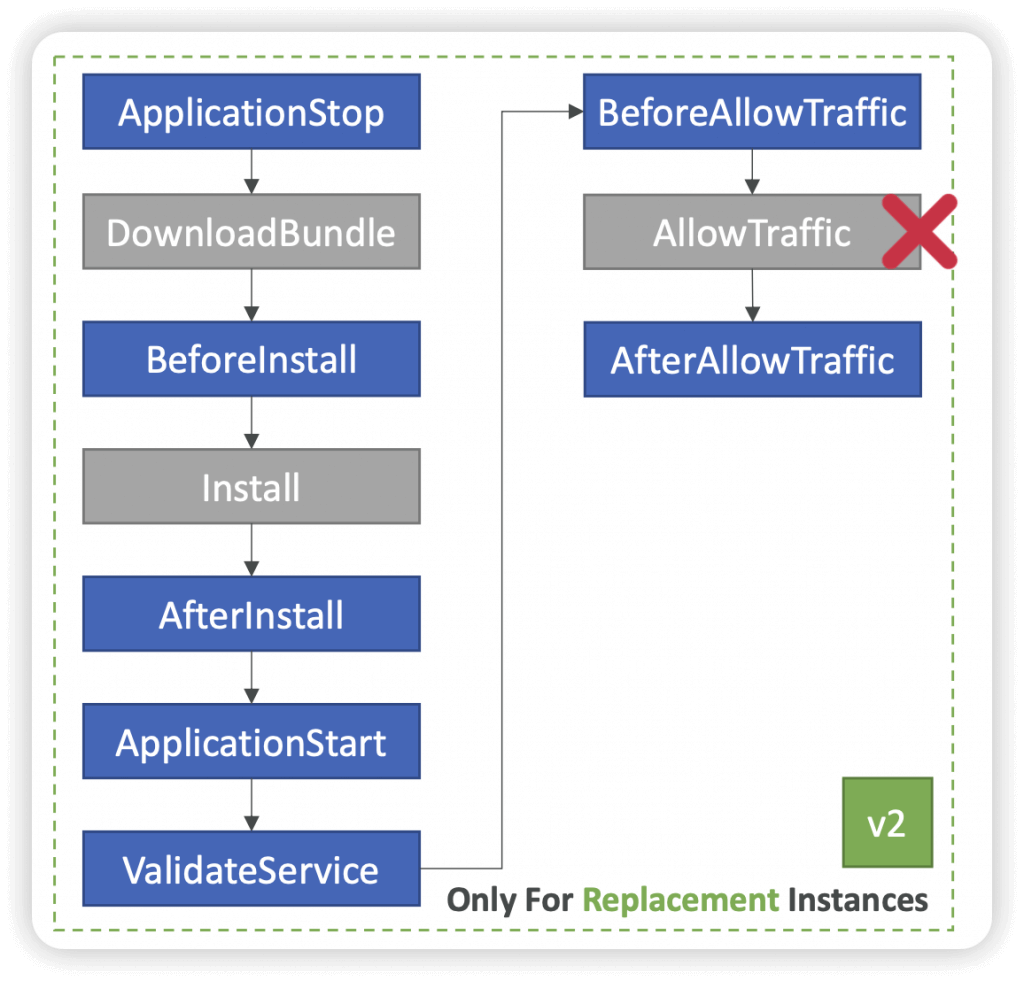

- CASE FOUR – failed AllowTraffic lifecycle event in Blue/Green Deployments with no error reported in the Deployment Logs

- Reason: incorrectly configured health checks in ELB

- Resolution: review and correct any errors in ELB health checks configuration


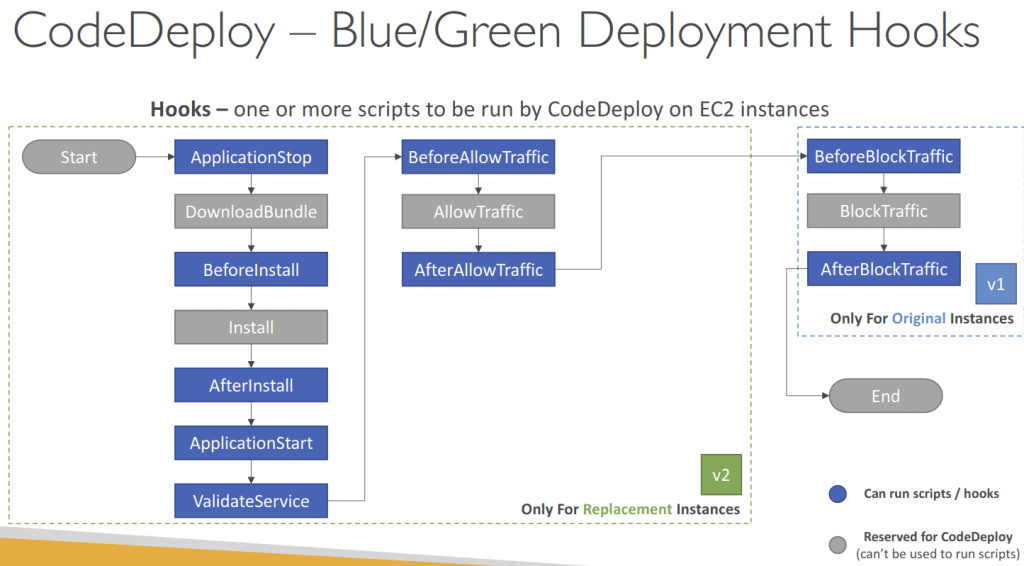

- Deployment Hooks differ by compute platform

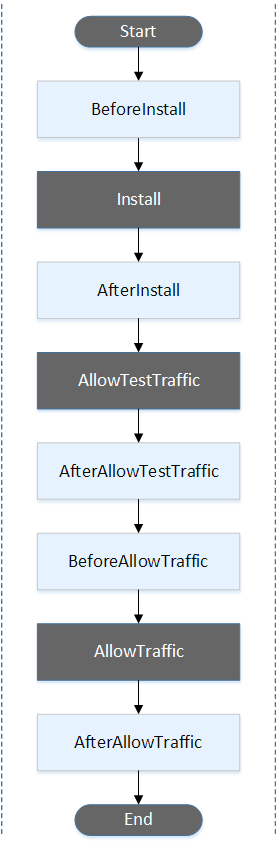

- Amazon ECS deployment

- An AWS Lambda hook is one Lambda function specified with a string on a new line after the name of the lifecycle event. Each hook is executed once per deployment.

- BeforeInstall

- AfterInstall

- AfterAllowTestTraffic

- BeforeAllowTraffic

- By the time the BeforeAllowTraffic hook runs, validation (for example in AfterAllowTestTraffic) has already succeeded, so using this hook for validation defeats its purpose. Use this hook to perform additional actions before allowing production traffic to flow to the new task version.

- AfterAllowTraffic


- AWS Lambda deployment

- BeforeAllowTraffic

- AfterAllowTraffic

- EC2/On-Premises deployment

- An EC2/On-Premises deployment hook is executed once per deployment to an instance. You can specify one or more scripts to run in a hook. Each hook for a lifecycle event is specified with a string on a separate line. The lifecycle events are listed below; DownloadBundle, Install, BlockTraffic, and AllowTraffic are reserved for the CodeDeploy agent and cannot run custom scripts.

- ApplicationStop

- DownloadBundle

- BeforeInstall

- Install

- AfterInstall

- ApplicationStart

- ValidateService

- BeforeBlockTraffic

- BlockTraffic

- AfterBlockTraffic

- BeforeAllowTraffic

- AllowTraffic

- AfterAllowTraffic

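A sketch of a check for the hooks section of an EC2/On-Premises AppSpec, represented here as the Python dict a YAML parser would produce. The event names come from the EC2/On-Premises list above, DownloadBundle, Install, BlockTraffic, and AllowTraffic are treated as reserved for the CodeDeploy agent, and the script paths are hypothetical.

```python
from typing import Dict, List

# Lifecycle events from the EC2/On-Premises list (a set, not the execution order)
EC2_EVENTS = {
    "ApplicationStop", "DownloadBundle", "BeforeInstall", "Install", "AfterInstall",
    "ApplicationStart", "ValidateService", "BeforeBlockTraffic", "BlockTraffic",
    "AfterBlockTraffic", "BeforeAllowTraffic", "AllowTraffic", "AfterAllowTraffic",
}
# Run by the CodeDeploy agent itself; scripts cannot be attached to these
RESERVED = {"DownloadBundle", "Install", "BlockTraffic", "AllowTraffic"}

def check_hooks(hooks: Dict[str, List[Dict]]) -> List[str]:
    """Return a list of problems in an AppSpec `hooks` section (empty means OK)."""
    problems = []
    for event, scripts in hooks.items():
        if event not in EC2_EVENTS:
            problems.append(f"{event}: unknown lifecycle event")
        elif event in RESERVED:
            problems.append(f"{event}: reserved for the CodeDeploy agent")
        for script in scripts:
            if "location" not in script:
                problems.append(f"{event}: script entry has no location")
    return problems

hooks = {
    "BeforeInstall": [{"location": "scripts/backup_current.sh", "timeout": 300, "runas": "root"}],
    "ValidateService": [{"location": "scripts/smoke_test.sh"}],
    "Install": [{"location": "scripts/copy_files.sh"}],
}
print(check_hooks(hooks))  # ['Install: reserved for the CodeDeploy agent']
```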

- Monitor CodeDeploy deployments using the following CloudWatch tools: Amazon EventBridge, CloudWatch alarms, and Amazon CloudWatch Logs

- Use Amazon EventBridge to detect and react to changes in the state of an instance or a deployment (an “event”) in your CodeDeploy operations. Then, based on the rules you create, EventBridge will invoke one or more target actions when a deployment or instance enters the state you specify in a rule. Depending on the type of state change, you might want to send notifications, capture state information, take corrective action, initiate events, or take other actions.

- Targets you can use with EventBridge in your CodeDeploy operations:

- AWS Lambda functions

- Kinesis streams

- Amazon SQS queues

- Built-in targets (CloudWatch alarm actions)

- Amazon SNS topics

- use cases

- Use a Lambda function to pass a notification to a Slack channel whenever deployments fail.

- Push data about deployments or instances to a Kinesis stream to support comprehensive, real-time status monitoring.

- Use CloudWatch alarm actions to automatically stop, terminate, reboot, or recover Amazon EC2 instances when a deployment or instance event you specify occurs.


- [ 🧐QUESTION🧐 ] Auto-rollback when a CodeDeploy deployment fails

- Set up an Amazon EventBridge rule to monitor AWS CodeDeploy operations with a Lambda function as a target. Configure the Lambda function to send a message to the DevOps Team’s Slack Channel if the deployment fails. Configure AWS CodeDeploy to use the “Roll back when a deployment fails” setting

- A CloudWatch Alarm can’t directly send a message to a Slack Channel. You have to use an EventBridge rule with an associated Lambda function to notify the DevOps Team via Slack.
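A sketch of the Lambda function in this answer. The rule pattern matches CodeDeploy deployment state-change events whose state is FAILURE, and the handler posts a short summary to a Slack incoming webhook using only the standard library. SLACK_WEBHOOK_URL is a hypothetical environment variable, and the detail fields used (deploymentId, application, deploymentGroup, state) are assumed from the CodeDeploy event format.

```python
import json
import os
import urllib.request

# EventBridge rule pattern: CodeDeploy deployments that reach the FAILURE state
RULE_PATTERN = {
    "source": ["aws.codedeploy"],
    "detail-type": ["CodeDeploy Deployment State-change Notification"],
    "detail": {"state": ["FAILURE"]},
}

def slack_message(event: dict) -> dict:
    """Build a Slack incoming-webhook payload from a CodeDeploy state-change event."""
    detail = event.get("detail", {})
    text = (
        f"CodeDeploy deployment {detail.get('deploymentId', '?')} "
        f"({detail.get('application', '?')}/{detail.get('deploymentGroup', '?')}) "
        f"is {detail.get('state', 'UNKNOWN')} in {event.get('region', '?')}"
    )
    return {"text": text}

def lambda_handler(event, context):
    request = urllib.request.Request(
        os.environ["SLACK_WEBHOOK_URL"],  # hypothetical variable holding the webhook URL
        data=json.dumps(slack_message(event)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=5) as response:
        return {"statusCode": response.status}
```

The rollback itself is still CodeDeploy's job ("Roll back when a deployment fails"); the function only handles the notification.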

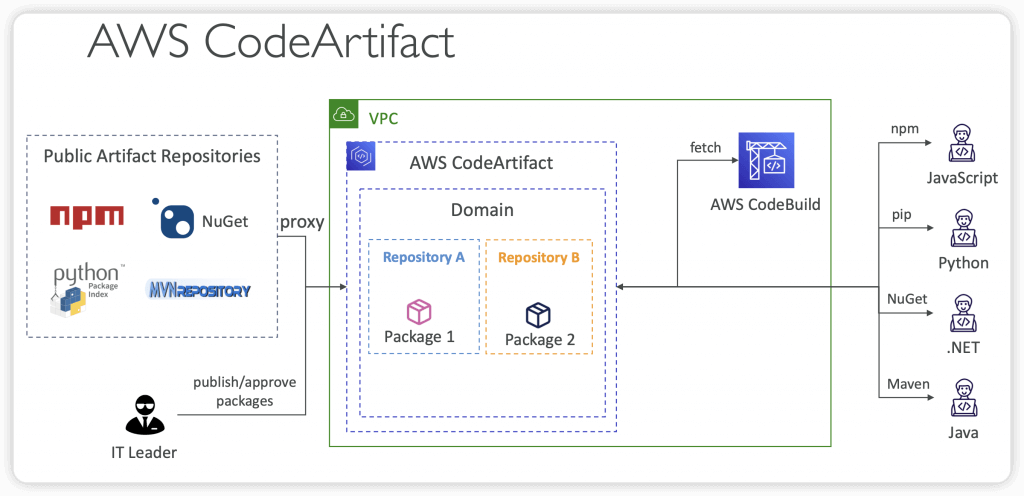

AWS CodeArtifact

- store, publish, and share software packages (aka code dependencies)

- Software packages depend on each other to be built (also called code dependencies), and new ones are created

- Storing and retrieving these dependencies is called artifact management

- Works with common dependency management tools such as Maven, Gradle, npm, yarn, twine, pip, and NuGet

- Developers and CodeBuild can then retrieve dependencies straight from CodeArtifact

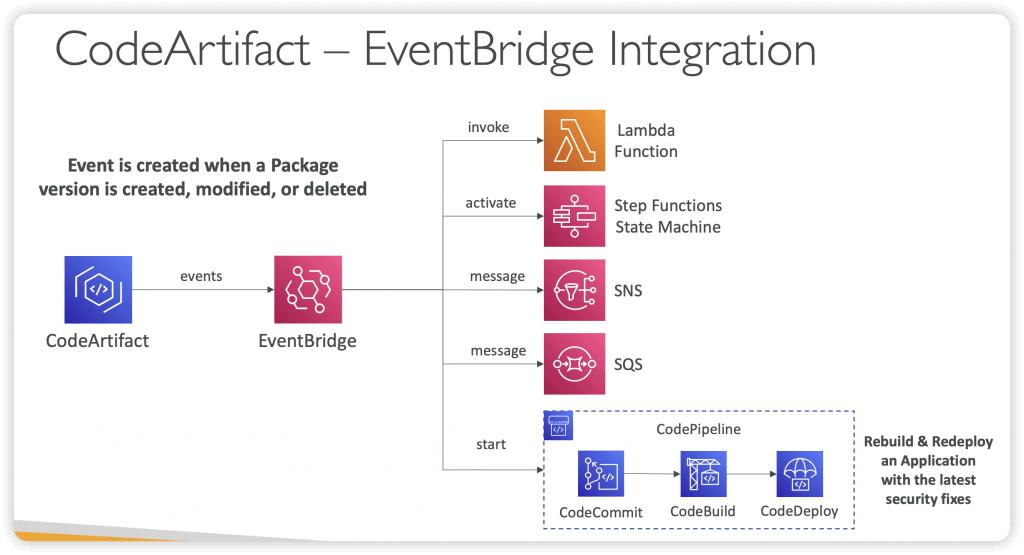

- CodeArtifact seamlessly integrates with Amazon EventBridge, a service that automates actions responding to specific events, including any activity within a CodeArtifact repository. This integration allows you to establish rules that dictate the actions to be taken when a particular event occurs.

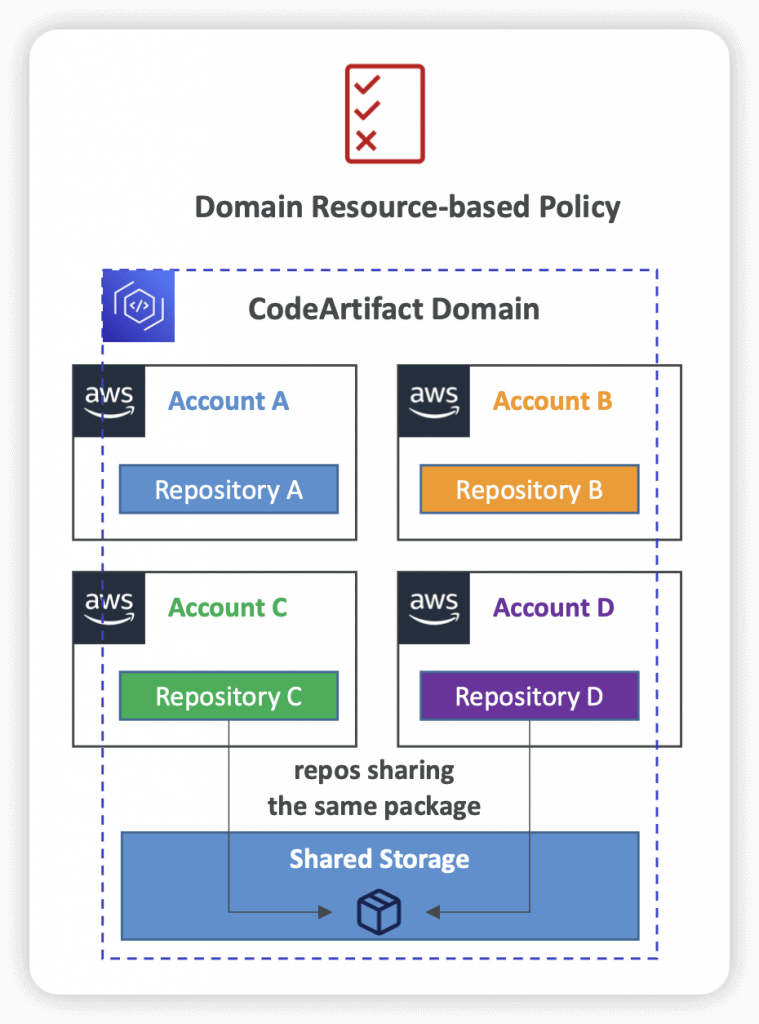

- Resource Policy

- Can be used to authorize another account to access CodeArtifact

- A given principal can either read all the packages in a repository or none of them

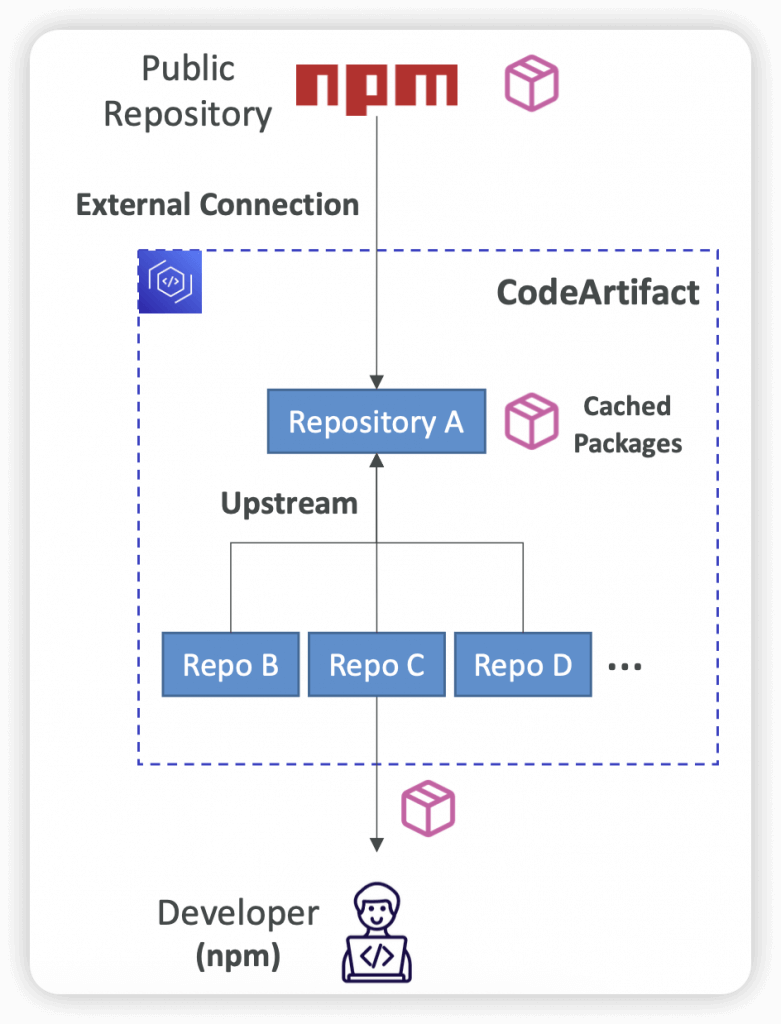

- Upstream Repositories

- A CodeArtifact repository can have other CodeArtifact repositories as Upstream Repositories

- So it works as a proxy that keeps the “dependencies” cached

- Allows a package manager client to access the packages that are contained in more than one repository using a single repository endpoint

- Up to 10 Upstream Repositories

- Only one external connection


- External Connection

- An External Connection is a connection between a CodeArtifact Repository and an external/public repository (e.g., Maven, npm, PyPI, NuGet…)

- A repository has a maximum of 1 external connection

- Create many repositories for many external connections

- Example – Connect to npmjs.com

- Configure one CodeArtifact Repository in your domain with an external connection to npmjs.com

- Configure all the other repositories with an upstream to it

- Packages fetched from npmjs.com are cached in the Upstream


- Retention

- If a requested package version is found in an Upstream Repository, a reference to it is retained and is always available from the Downstream Repository

- The retained package version is not affected by changes to the Upstream Repository (deleting it, updating the package…)

- Intermediate repositories do not keep the package

- Example – Fetching Package from npmjs.com

- Package Manager connected to Repository A requests the package Lodash v4.17.20

- The package version is not present in any of the three repositories

- The package version will be fetched from npmjs.com

- When Lodash 4.17.20 is fetched, it will be retained in:

- Repository A – the most-downstream repository

- Repository C – has the external connection to npmjs.com

- The Package version will not be retained in Repository B as that is an intermediate Repository

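A toy simulation of the retention example above, with Repository A using B as its upstream, B using C, and C holding the external connection. It only models the rules listed in these notes (at most 10 upstreams, one external connection, retention in the requesting repository and in the repository where the package was found, not in intermediates) and is not how CodeArtifact is implemented.

```python
from typing import List, Optional, Set

class Repo:
    """Toy CodeArtifact repository: upstreams plus at most one external connection."""
    MAX_UPSTREAMS = 10

    def __init__(self, name: str, upstreams: Optional[List["Repo"]] = None,
                 external_packages: Optional[Set[str]] = None):
        upstreams = upstreams or []
        if len(upstreams) > self.MAX_UPSTREAMS:
            raise ValueError("a repository can have at most 10 upstream repositories")
        self.name = name
        self.upstreams = upstreams
        # Packages reachable through this repository's single (simulated) external connection
        self.external_packages = external_packages or set()
        self.packages: Set[str] = set()  # package versions retained in this repository

    def _find(self, package: str, path: List["Repo"]) -> Optional[List["Repo"]]:
        """Depth-first search through upstreams; returns the chain of repos to the source."""
        path = path + [self]
        if package in self.packages or package in self.external_packages:
            return path
        for upstream in self.upstreams:
            found = upstream._find(package, path)
            if found:
                return found
        return None

    def fetch(self, package: str) -> List[str]:
        path = self._find(package, [])
        if path is None:
            raise LookupError(package)
        path[-1].packages.add(package)  # repo where it was found (e.g. via external connection)
        self.packages.add(package)      # the requesting, most-downstream repo
        return [repo.name for repo in path]  # intermediate repos are left unchanged

npm = {"lodash@4.17.20"}  # stand-in for npmjs.com
repo_c = Repo("C", external_packages=npm)
repo_b = Repo("B", upstreams=[repo_c])
repo_a = Repo("A", upstreams=[repo_b])

print(repo_a.fetch("lodash@4.17.20"))  # ['A', 'B', 'C']
print(sorted(r.name for r in (repo_a, repo_b, repo_c) if "lodash@4.17.20" in r.packages))  # ['A', 'C']
```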

- Domains

- Deduplicated Storage – asset only needs to be stored once in a domain, even if it’s available in many repositories (only pay once for storage)

- Fast Copying – only metadata records are updated when you pull packages from an Upstream CodeArtifact Repository into a Downstream Repository

- Easy Sharing Across Repositories and Teams – all the assets and metadata in a domain are encrypted with a single AWS KMS Key

- Apply Policy Across Multiple Repositories – domain administrator can apply policy across the domain such as:

- Restricting which accounts have access to repositories in the domain

- Who can configure connections to public repositories to use as sources of packages

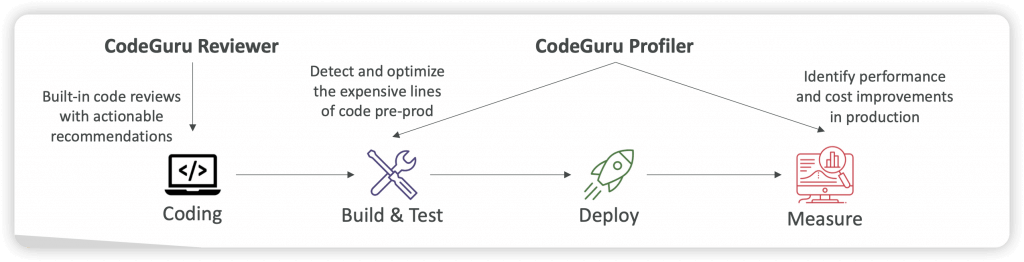

AWS CodeGuru

- a developer tool that provides intelligent recommendations to improve the quality of your codebase and for identifying an application’s most “expensive” lines of code in terms of resource intensiveness, CPU performance, and code efficiency

- Uses Machine Learning for automated code reviews and application performance recommendations

- CodeGuru Reviewer – identify critical issues, security vulnerabilities, and hard-to-find bugs

- automated code reviews for static code analysis (development)

- Example: common coding best practices, resource leaks, security detection, input validation

- Uses Machine Learning and automated reasoning

- CodeGuru Reviewer Secrets Detector

- Uses ML to identify hardcoded secrets embedded in your code (e.g., passwords, API keys, credentials, SSH keys…)

- Besides scanning code, it scans configuration and documentation files

- Suggests remediation to automatically protect your secrets with Secrets Manager

- Secrets Manager, a managed service that lets you securely and automatically store, rotate, manage, and retrieve credentials, API keys, and all sorts of secrets.

- Parameter Store typically does not provide automatic secrets rotation

- CodeGuru Profiler

- visibility/recommendations about application performance during runtime (production)

- Example: identify if your application is consuming excessive CPU capacity on a logging routine

- Features:

- Identify and remove code inefficiencies

- Improve application performance (e.g., reduce CPU utilization)

- Decrease compute costs

- Provides heap summary (identifies which objects are using up memory)

- Anomaly Detection

- Supports applications running on AWS or on-premises

- Minimal overhead on application

- Integrate CodeGuru Profiler with Lambda functions using one of the following:

- Function Decorator @with_lambda_profiler

- Add the codeguru_profiler_agent dependency to your Lambda function .zip file or use Lambda Layers

- Enable Profiling in the Lambda function configuration


- CodeGuru Reviewer supports Java and Python

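- CodeGuru Profiler agent configuration parameters:

- MaxStackDepth – the maximum depth of the call stack (chain of method calls) represented in a profile

- MemoryUsageLimitPercent – the percentage of memory the profiler agent may use

- MinimumTimeForReportingInMilliseconds – the minimum time between sending reports

- ReportingIntervalInMilliseconds – the interval at which profiles are reported

- SamplingIntervalInMilliseconds – the interval at which stack samples are taken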
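For the function-decorator option for Lambda (Python runtime), a minimal sketch follows. The `profiling_group_name` keyword follows the agent's documented usage but should be checked against the agent version you bundle; the group name, the handler body, and the no-op fallback (which only lets the module import and be unit-tested where the agent isn't installed) are illustrative assumptions.

```python
try:
    # Provided by the codeguru_profiler_agent package (bundled in the .zip or a Lambda Layer).
    from codeguru_profiler_agent import with_lambda_profiler
except ImportError:
    # Local fallback: a no-op decorator so the handler can be imported and tested without the agent.
    def with_lambda_profiler(**_kwargs):
        def decorator(func):
            return func
        return decorator


@with_lambda_profiler(profiling_group_name="my-profiling-group")  # hypothetical group name
def handler(event, context):
    # Hypothetical workload: count the items passed in the event.
    items = event.get("items", [])
    return {"count": len(items)}
```

The decorator only wraps the handler; the function's own logic is unchanged, which is why this option needs no other code edits beyond packaging the agent.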

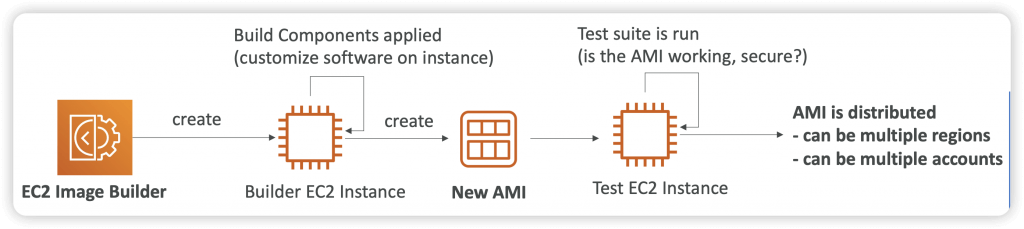

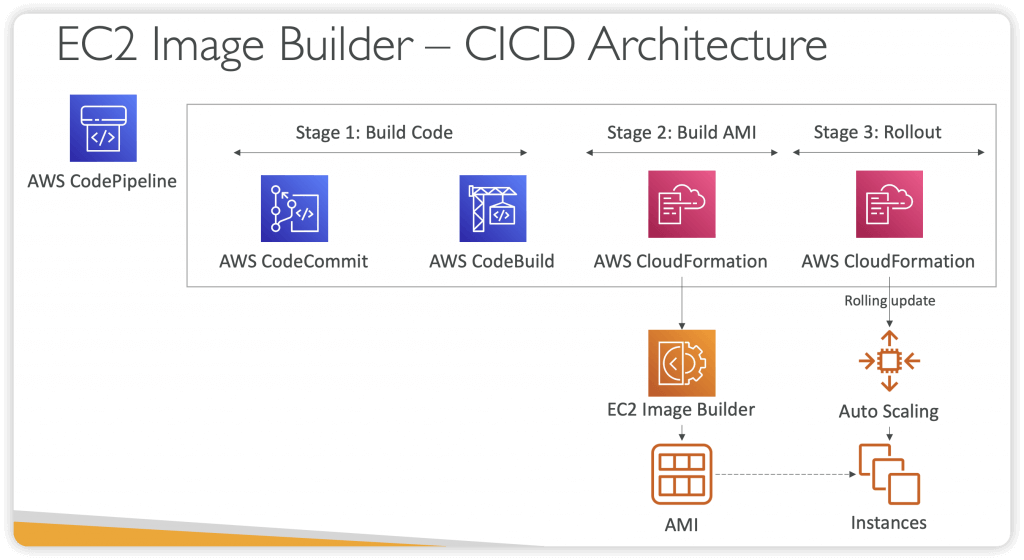

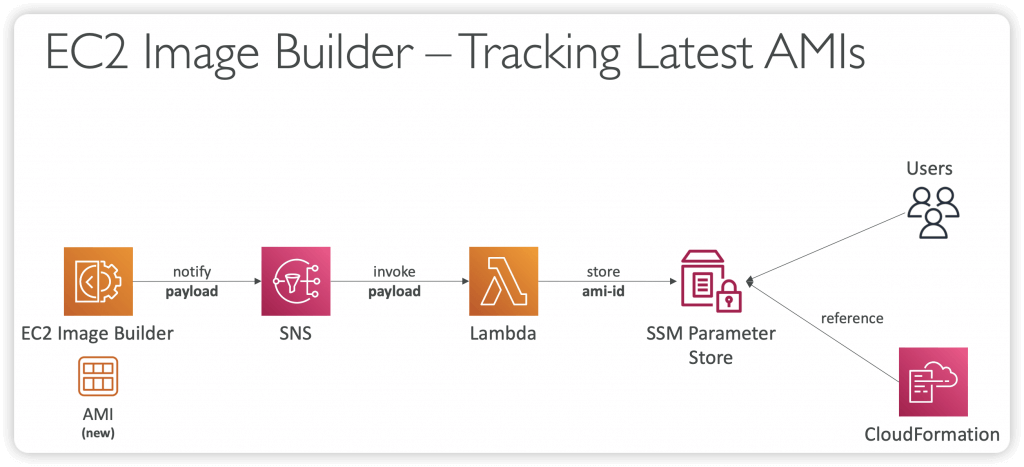

EC2 Image Builder

- Used to automate the creation of Virtual Machines or container images

- => Automate the creation, maintenance, validation, and testing of EC2 AMIs

- Can be run on a schedule (weekly, whenever packages are updated, etc…)

- Free service (only pay for the underlying resources)

- Can publish AMI to multiple regions and multiple accounts

- Use AWS Resource Access Manager (RAM) to share Images, Recipes, and Components across AWS accounts or through AWS Organizations
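A scheduled pipeline that only rebuilds when packages change can be sketched as below, assuming `imagebuilder_client` behaves like `boto3.client("imagebuilder")` and that the recipe, infrastructure, and distribution configurations (the last one lists the target regions/accounts) already exist. The pipeline name is hypothetical, and the cron string assumes the EventBridge-style six-field syntax; check the Image Builder cron documentation before relying on it.

```python
import uuid
from typing import Any


def create_weekly_ami_pipeline(imagebuilder_client: Any, recipe_arn: str,
                               infra_config_arn: str, distribution_config_arn: str) -> str:
    """Create an Image Builder pipeline that rebuilds, tests, and distributes an AMI weekly.

    `imagebuilder_client` is assumed to behave like boto3.client("imagebuilder").
    """
    response = imagebuilder_client.create_image_pipeline(
        name="golden-ami-weekly",  # hypothetical pipeline name
        imageRecipeArn=recipe_arn,  # base image + components that make up the AMI
        infrastructureConfigurationArn=infra_config_arn,  # build/test instance settings
        distributionConfigurationArn=distribution_config_arn,  # target regions/accounts
        imageTestsConfiguration={"imageTestsEnabled": True},  # run image tests in the pipeline
        schedule={
            # Mondays at 03:00 (assumed EventBridge-style six-field cron syntax)
            "scheduleExpression": "cron(0 3 ? * mon *)",
            # Run only when the schedule matches AND newer dependency versions are available
            "pipelineExecutionStartCondition": "EXPRESSION_MATCH_AND_DEPENDENCY_UPDATES_AVAILABLE",
        },
        clientToken=str(uuid.uuid4()),  # idempotency token
    )
    return response["imagePipelineArn"]
```

The start condition is what implements "whenever packages are updated": with `EXPRESSION_MATCH_ONLY` instead, the pipeline would run on every schedule tick.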

| Parameters | AWS EC2 Image Builder | Packer |
|---|---|---|
| Tool Type | Fully managed service by AWS | Open-source tool by HashiCorp |
| Supported Platforms | Primarily for AWS (EC2 AMIs) with integration into AWS services | Multi-cloud (AWS, Azure, GCP), on-premise, and container platforms |
| Customization | Limited to AWS-managed pipelines, some customization possible | Fully customizable, supports a wide range of provisioners like Ansible, Chef, Shell |
| Ease of Use | User-friendly, integrated with AWS services, no manual server setup | Requires manual configuration, more complex setup but highly flexible |
| Cost | No additional cost beyond the AWS resources used | Free (open-source), but costs may arise from cloud usage during image builds |
| Automation | Fully automated AMI creation and distribution pipelines | Can be automated but requires more manual setup and scripting for pipelines |
| Testing & Validation | Integrated with AWS services like CloudWatch and Systems Manager for validation | Requires custom scripting or external tools for validation and testing |
| Version Control | Provides version control for image pipelines within the AWS ecosystem | Supports versioning of templates but requires manual version control of scripts |
| Multi-environment Support | Primarily focused on AWS environments | Supports multiple cloud environments, containers, and virtualization platforms |
| Parallel Builds | Limited to AWS resources; no built-in multi-cloud capability | Supports parallel image creation for multiple environments simultaneously |
| Security & Compliance | Built-in security updates and compliance checks via AWS services | Requires manual setup of security updates and compliance integration |
| Integration with IaC | Native integration with AWS services like CloudFormation | Integrates with Terraform, making it versatile across platforms |

REPO

A repository is the fundamental version control object in GitHub or GitLab. It is where you securely store the code and files for your project, along with the project history from the first commit through the latest changes. You can share a repository with other users so that you can work on a project together. In GitHub and GitLab, you can also configure notifications so that repository users receive emails about events (for example, another user commenting on code), change the repository's default settings, browse and navigate its contents, and set up triggers so that code pushes or other events invoke actions, such as sending emails or running code. You can also configure a repository on your local computer (a local repo) to push your changes to more than one remote repository.

In designing your CI/CD process in AWS, you can use a single repository in GitHub (or GitLab) and create different branches for development, master, and release. You can use CodeBuild to build your application and run tests to verify that all of the core features of your application are working. For deployment, you can select either an in-place or a blue/green deployment in CodeDeploy.
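The deployment step of that branch-based flow can be sketched as follows, assuming CodeBuild has uploaded a zip bundle to S3 and that `codedeploy_client` behaves like `boto3.client("codedeploy")`. The application name, deployment group names, and branch-to-group mapping are hypothetical. In CodeDeploy the in-place vs blue/green choice is a property of the deployment group (its `deploymentStyle`), so each group is assumed to have been created with the matching style.

```python
from typing import Any, Dict, Optional

# Hypothetical mapping from branch to CodeDeploy deployment group.
# Each group is assumed to be configured with the deployment style in its name.
BRANCH_TO_DEPLOYMENT_GROUP: Dict[str, str] = {
    "development": "my-app-dev-inplace",
    "release": "my-app-prod-bluegreen",
}


def deploy_branch_build(codedeploy_client: Any, branch: str,
                        bucket: str, key: str) -> Optional[str]:
    """Deploy the CodeBuild output for `branch`, or return None if that branch isn't deployed.

    `codedeploy_client` is assumed to behave like boto3.client("codedeploy").
    """
    group = BRANCH_TO_DEPLOYMENT_GROUP.get(branch)
    if group is None:
        return None  # e.g. master: built and tested, but not deployed in this sketch
    response = codedeploy_client.create_deployment(
        applicationName="my-app",  # hypothetical application name
        deploymentGroupName=group,
        revision={
            "revisionType": "S3",
            "s3Location": {"bucket": bucket, "key": key, "bundleType": "zip"},
        },
    )
    return response["deploymentId"]
```

Keeping the style on the deployment group means the same call deploys in-place to development and blue/green to release; only the group name differs.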